Logos LIP Index

An IETF-style index of Logos-managed LIPs across Storage, Messaging, Blockchain, and IFT-TS components.

About

The Logos LIP Index collects specifications maintained by IFT-TS across Messaging, Blockchain, and Storage. Each RFC documents a protocol, process, or system in a consistent, reviewable format.

This site is generated with mdBook from the repository: vacp2p/rfc-index.

Contributing

- Open a pull request against the repo.

- Add or update the RFC in the appropriate component folder.

- Include clear status and category metadata in the RFC header table.

If you are unsure where a document belongs, open an issue first and we will help route it.

We keep RFCs in Markdown within this repository; updates happen through pull requests.

Links

- IFT-TS: https://vac.dev

- IETF RFC Series: https://www.rfc-editor.org/

- Repository: https://github.com/vacp2p/rfc-index

Messaging LIPs

Logos Messaging builds a family of privacy-preserving, censorship-resistant communication protocols for web3 applications.

Contributors can visit Messaging LIPs for new Messaging specifications under discussion.

Waku Standards - Core

Core Waku protocol specifications, including messaging, peer discovery, and network primitives.

10/WAKU2

| Field | Value |
|---|---|
| Name | Waku v2 |
| Slug | 10 |
| Status | draft |
| Editor | Hanno Cornelius |
| Contributors | Sanaz Taheri, Hanno Cornelius, Reeshav Khan, Daniel Kaiser, Oskar Thorén |

Timeline

- 2026-01-16 (f01d5b9): chore: fix links (#260)
- 2026-01-16 (89f2ea8): Chore/mdbook updates (#258)
- 2025-12-22 (0f1855e): Chore/fix headers (#239)
- 2025-12-22 (b1a5783): Chore/mdbook updates (#237)
- 2025-12-18 (d03e699): ci: add mdBook configuration (#233)
- 2025-04-15 (34aa3f3): Fix links 10/WAKU2 (#153)
- 2025-04-09 (cafa04f): 10/WAKU2: Update (#125)
- 2024-11-20 (ff87c84): Update Waku Links (#104)
- 2024-09-13 (3ab314d): Fix Files for Linting (#94)
- 2024-03-21 (2eaa794): Broken Links + Change Editors (#26)
- 2024-02-01 (8e14d58): Update waku2.md
- 2024-02-01 (6cf68fd): Update waku2.md
- 2024-02-01 (6734b16): Update waku2.md
- 2024-01-31 (356649a): Update and rename WAKU2.md to waku2.md
- 2024-01-27 (550238c): Rename README.md to WAKU2.md
- 2024-01-27 (eef961b): remove rfs folder
- 2024-01-26 (d6651b7): Update README.md
- 2024-01-25 (6e98666): Rename README.md to README.md
- 2024-01-25 (9b740d8): Rename waku/10/README.md to waku/specs/standards/core/10-WAKU2/README.md
- 2024-01-24 (330c35b): Create README.md

Abstract

Waku is a family of modular peer-to-peer protocols for secure communication. The protocols are designed to be secure, privacy-preserving, and censorship-resistant, and to run in resource-restricted environments. At a high level, Waku implements Pub/Sub over libp2p and adds a set of capabilities to it, such as: (i) retrieving historical messages for mostly-offline devices; (ii) adaptive nodes, allowing heterogeneous nodes to contribute to the network; and (iii) preserving bandwidth for resource-restricted devices.

This makes Waku ideal for running a p2p protocol on mobile devices and other similar restricted environments.

Historically, it has its roots in 6/WAKU1, which stems from Whisper, originally part of the Ethereum stack. However, Waku acts more as a thin wrapper for Pub/Sub and has a different API. It is implemented iteratively, with the initial focus on porting essential functionality to libp2p. See the rough road map (2020) for more historical context.

Motivation and Goals

Waku, as a family of protocols, is designed to have a set of properties that are useful for many applications:

1. Useful for generalized messaging.

Many applications require some form of messaging protocol to communicate between different subsystems or different nodes. This messaging can be human-to-human, machine-to-machine or a mix. Waku is designed to work for all these scenarios.

2. Peer-to-peer.

Applications sometimes have requirements that make them suitable for peer-to-peer solutions:

- Censorship-resistant with no single point of failure

- Adaptive and scalable network

- Shared infrastructure

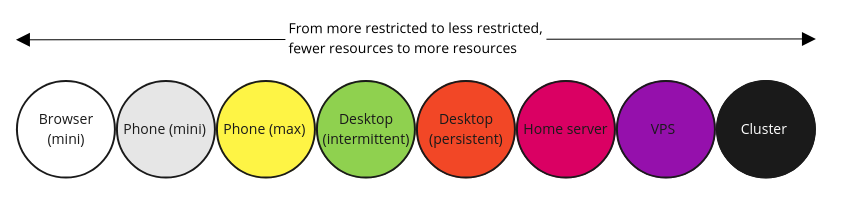

3. Runs anywhere.

Applications often run in restricted environments, where resources or the environment is restricted in some fashion. For example:

- Limited bandwidth, CPU, memory, disk, battery, etc.

- Not being publicly connectable

- Only being intermittently connected; mostly-offline

4. Privacy-preserving.

Applications often have a desire for some privacy guarantees, such as:

- Pseudonymity and not being tied to any personally identifiable information (PII)

- Metadata protection in transit

- Various forms of unlinkability, etc.

5. Modular design.

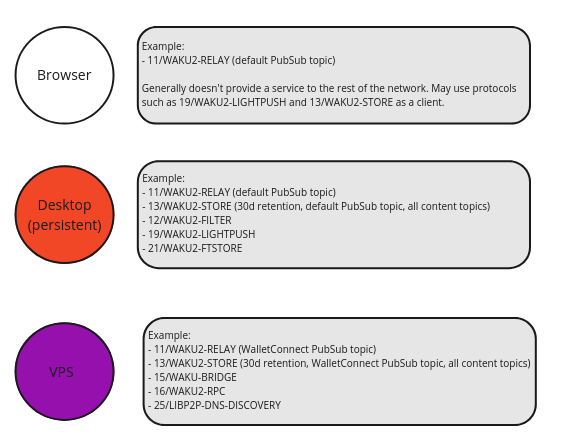

Applications often have different trade-offs when it comes to which properties they and their users value. Waku is designed in a modular fashion, so an application protocol or node can choose which protocols it runs. We call this concept adaptive nodes.

For example:

- Resource usage vs metadata protection

- Providing useful services to the network vs mostly using it

- Stronger guarantees for spam protection vs economic registration cost

For more on the concept of adaptive nodes and what this means in practice, please see the 30/ADAPTIVE-NODES spec.

Specification

The keywords “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Network Interaction Domains

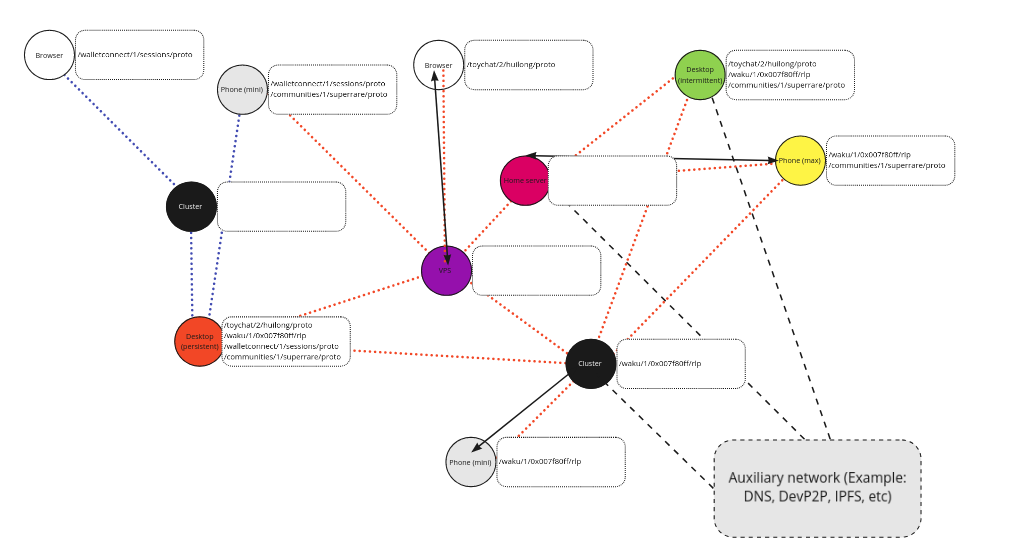

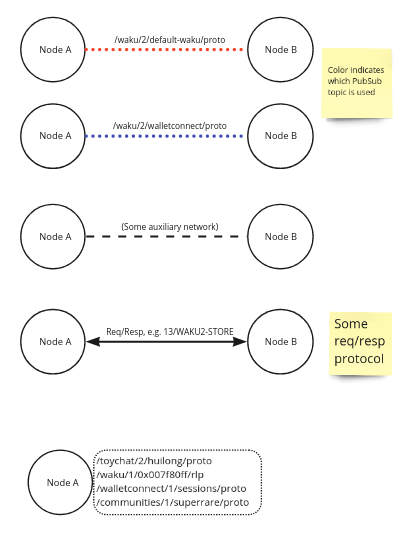

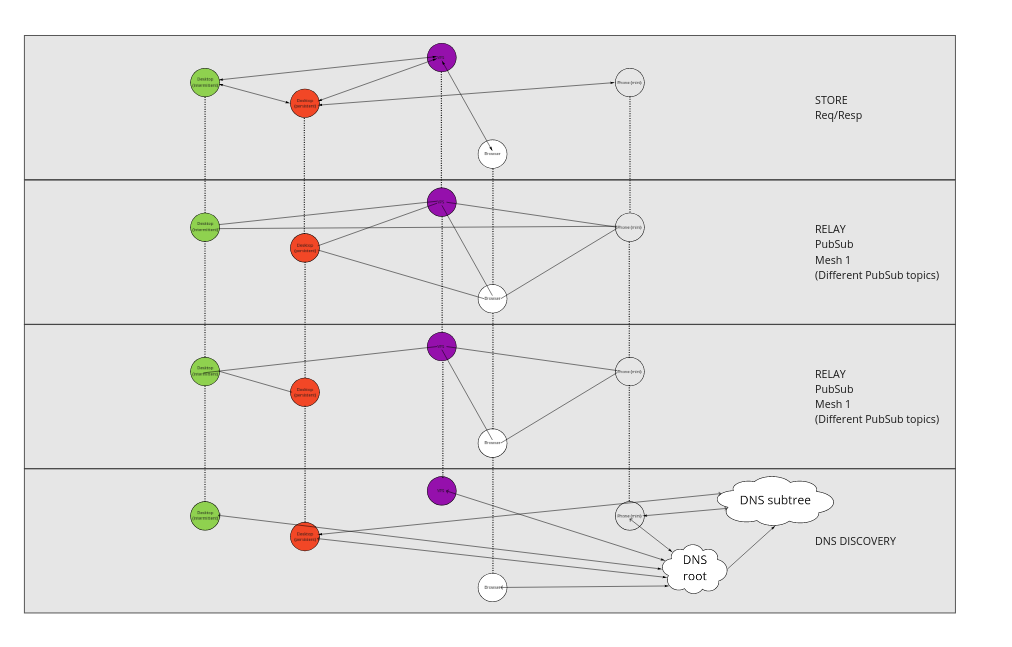

While Waku is best thought of as a single cohesive thing, there are three network interaction domains: (a) the gossip domain, (b) the discovery domain, and (c) the request/response domain.

Protocols and Identifiers

Since Waku is built on top of libp2p, many protocols have a libp2p protocol identifier. The current main protocol identifiers are:

- /vac/waku/relay/2.0.0

- /vac/waku/store-query/3.0.0

- /vac/waku/filter/2.0.0-beta1

- /vac/waku/lightpush/2.0.0-beta1

This is in addition to protocols that specify messages, payloads, and recommended usages. Since these aren't negotiated libp2p protocols, they are referred to by their RFC ID. For example:

- 14/WAKU2-MESSAGE and 26/WAKU-PAYLOAD for message payloads

- 23/WAKU2-TOPICS and 27/WAKU2-PEERS for recommendations around usage

There are also more experimental libp2p protocols such as:

- /vac/waku/waku-rln-relay/2.0.0-alpha1

- /vac/waku/peer-exchange/2.0.0-alpha1

The semantics of these protocols are specified in 17/WAKU2-RLN-RELAY and 34/WAKU2-PEER-EXCHANGE, respectively.

Use of libp2p and Protobuf

Unless otherwise specified, all protocols are implemented over libp2p and use Protobuf by default. Since messages are exchanged over a bi-directional binary stream, as a convention, libp2p protocols prefix binary message payloads with the length of the message in bytes. This length integer is encoded as a protobuf varint.
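The length-prefix convention can be illustrated with a minimal Python sketch. The function names are hypothetical and the code is not taken from any Waku implementation. It assumes the prefix is an unsigned protobuf varint, with 7 payload bits per byte and the high bit set on every byte except the last.

```python
def encode_uvarint(n: int) -> bytes:
    """Encode a non-negative integer as a protobuf (LEB128) unsigned varint."""
    if n < 0:
        raise ValueError("varint must be non-negative")
    out = bytearray()
    while True:
        byte = n & 0x7F              # low 7 bits of the remaining value
        n >>= 7
        if n:
            out.append(byte | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def decode_uvarint(buf: bytes, offset: int = 0) -> tuple[int, int]:
    """Decode a varint starting at offset; return (value, offset after it)."""
    result, shift = 0, 0
    while True:
        if offset >= len(buf):
            raise ValueError("truncated varint")
        byte = buf[offset]
        offset += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, offset
        shift += 7


def frame(payload: bytes) -> bytes:
    """Prefix a serialized protobuf message with its length in bytes."""
    return encode_uvarint(len(payload)) + payload


def read_frames(stream: bytes) -> list[bytes]:
    """Split a buffer of back-to-back length-prefixed messages."""
    frames, offset = [], 0
    while offset < len(stream):
        length, offset = decode_uvarint(stream, offset)
        if offset + length > len(stream):
            raise ValueError("truncated frame")
        frames.append(stream[offset:offset + length])
        offset += length
    return frames
```

Because each frame carries its own length, a reader can split several messages sent back to back on one stream without any delimiter bytes. For example, a 300-byte payload gets the two-byte prefix `b"\xac\x02"`.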

Gossip Domain

Waku uses gossiping to disseminate messages throughout the network.

Protocol identifier: /vac/waku/relay/2.0.0

See 11/WAKU2-RELAY specification for more details.

For an experimental privacy-preserving economic spam protection mechanism, see 17/WAKU2-RLN-RELAY.

See 23/WAKU2-TOPICS for more information about the recommended topic usage.

Direct use of libp2p protocols

In addition to /vac/waku/* protocols, Waku MAY directly use the following libp2p protocols:

- the libp2p ping protocol, with protocol ID /ipfs/ping/1.0.0, for liveness checks between peers or to keep peer-to-peer connections alive;

- the libp2p identify and identify/push protocols, with protocol IDs /ipfs/id/1.0.0 and /ipfs/id/push/1.0.0 respectively, as a basic means of capability discovery. These protocols are used anyway by the libp2p connection-establishment layer on which Waku is built. We plan to introduce a new IFT-TS capability discovery protocol with better anonymity properties and more functionality.

Transports

Waku is built on top of libp2p and, like libp2p, strives to be transport-agnostic. We define a set of recommended transports to achieve a baseline of interoperability between clients. This section describes these recommended transports.

Waku client implementations SHOULD support the TCP transport. Where TCP is supported it MUST be enabled for both dialing and listening, even if other transports are available.

Waku nodes running in environments that do not allow the direct use of TCP MAY use other transports.

A Waku node SHOULD support secure websockets for bidirectional communication streams, for example in a web browser context.

A node MAY support insecure websockets if required by the application or running environment.

Discovery Domain

Discovery Methods

Waku can retrieve a list of nodes to connect to using DNS-based discovery as per EIP-1459. While this is a useful way of bootstrapping connection to a set of peers, it MAY be used in conjunction with an ambient peer discovery procedure to find other nodes to connect to, such as Node Discovery v5. It is possible to bypass the discovery domain by specifying static nodes.
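EIP-1459 publishes the node list as a tree of DNS TXT records. The sketch below is based on our reading of EIP-1459. It classifies a single TXT record into one of four entry types: root, branch, ENR leaf, or link to another tree. It is illustrative only. The names are hypothetical, it performs no DNS lookups, and it neither verifies the root signature nor decodes the ENR.

```python
from dataclasses import dataclass, field


@dataclass
class TreeEntry:
    kind: str                                   # "root", "branch", "enr" or "link"
    fields: dict = field(default_factory=dict)  # parsed contents of the record


def parse_txt_record(txt: str) -> TreeEntry:
    """Classify one EIP-1459 TXT record by its prefix (no signature checks)."""
    if txt.startswith("enrtree-root:v1 "):
        # "enrtree-root:v1 e=<enr-root> l=<link-root> seq=<n> sig=<sig>"
        kv = dict(part.split("=", 1) for part in txt.split()[1:])
        return TreeEntry("root", kv)
    if txt.startswith("enrtree-branch:"):
        # comma-separated hashes of child entries
        children = txt[len("enrtree-branch:"):]
        return TreeEntry("branch", {"children": [c for c in children.split(",") if c]})
    if txt.startswith("enrtree://"):
        # link to another tree: enrtree://<public key>@<domain>
        key, domain = txt[len("enrtree://"):].split("@", 1)
        return TreeEntry("link", {"key": key, "domain": domain})
    if txt.startswith("enr:"):
        # leaf carrying a node record
        return TreeEntry("enr", {"record": txt[len("enr:"):]})
    raise ValueError(f"unrecognised tree entry: {txt[:20]}")
```

A client would walk the tree from the root, following branch hashes down to `enr:` leaves. Those leaves are the node records that the next subsection's ENR fields live in.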

Use of ENR

WAKU2-ENR describes the usage of EIP-778 ENR (Ethereum Node Records) for Waku discovery purposes. It introduces two new ENR fields, multiaddrs and waku2, that a Waku node MAY use for discovery purposes. These fields MUST be used under certain conditions, as set out in the specification.

Both EIP-1459 DNS-based discovery and Node Discovery v5 operate on ENR, and it's reasonable to expect even wider utility for ENR in Waku networks in the future.
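The waku2 field advertises which Waku protocols a node supports. A minimal Python sketch of encoding and decoding it follows. The bit layout used here (relay in the least significant bit, then store, filter, and lightpush) and the one-byte encoding are assumptions recalled from WAKU2-ENR. This document does not state them, so check that specification before relying on them.

```python
from enum import IntFlag


class Waku2Capabilities(IntFlag):
    # ASSUMED bit layout, least significant bit first; verify against WAKU2-ENR.
    RELAY = 1 << 0
    STORE = 1 << 1
    FILTER = 1 << 2
    LIGHTPUSH = 1 << 3


KNOWN_BITS = (Waku2Capabilities.RELAY | Waku2Capabilities.STORE
              | Waku2Capabilities.FILTER | Waku2Capabilities.LIGHTPUSH)


def encode_waku2_field(caps: Waku2Capabilities) -> bytes:
    """Encode capability flags as the (assumed) single byte stored under waku2."""
    return bytes([int(caps)])


def decode_waku2_field(raw: bytes) -> Waku2Capabilities:
    """Decode a one-byte waku2 value, ignoring bits this sketch does not know."""
    if len(raw) != 1:
        raise ValueError("expected a single byte")
    return Waku2Capabilities(raw[0] & int(KNOWN_BITS))
```

A discovering node could use such flags to keep only peers that advertise, for example, the store protocol before dialing them.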

Request/Response Domain

In addition to the Gossip domain, Waku provides a set of request/response protocols. They are primarily used to let Waku run in resource-restricted environments, such as those with low bandwidth or with nodes that are mostly offline.

Historical Message Support

Protocol identifier: /vac/waku/store-query/3.0.0

This is used to fetch historical messages for mostly-offline devices. See the 13/WAKU2-STORE specification for more details.

There is also an experimental fault-tolerant addition to the store protocol that relaxes the high-availability requirement. See 21/WAKU2-FAULT-TOLERANT-STORE.

Content Filtering

Protocol identifier: /vac/waku/filter/2.0.0-beta1

This is used to preserve more bandwidth when fetching a subset of messages. See 12/WAKU2-FILTER specification for more details.

LightPush

Protocol identifier: /vac/waku/lightpush/2.0.0-beta1

This is used for nodes with short connection windows and limited bandwidth to publish messages into the Waku network. See 19/WAKU2-LIGHTPUSH specification for more details.

Other Protocols

The above is a non-exhaustive list, and due to the modular design of Waku, there may be other protocols here that provide a useful service to the Waku network.

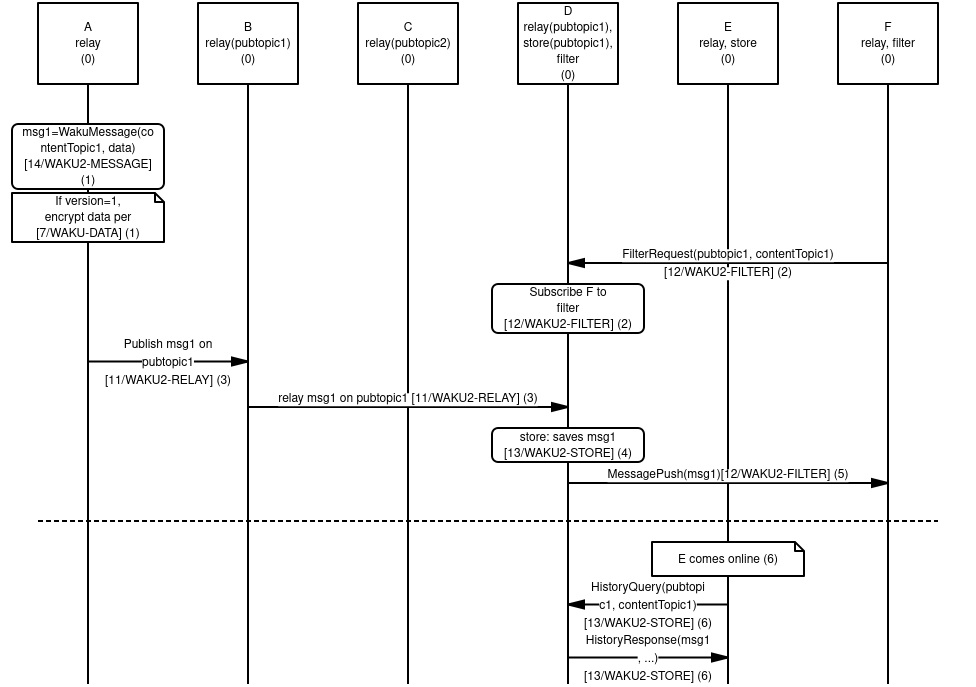

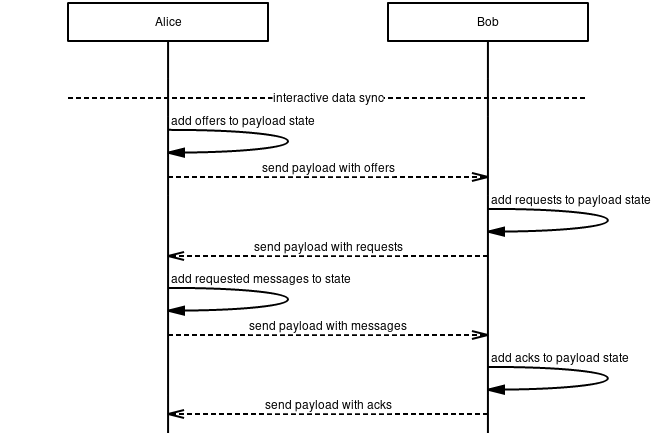

Overview of Protocol Interaction

See the sequence diagram below for an overview of how different protocols interact.

- We have six nodes, A-F. The protocols initially mounted on each node are indicated. The PubSub topics pubtopic1 and pubtopic2 are used for routing; a topic listed for a node indicates that the node is subscribed to messages on that topic for relay (see 11/WAKU2-RELAY for details). Likewise, for 13/WAKU2-STORE the topic indicates that these messages are persisted on that node.

- Node A creates a WakuMessage msg1 with a ContentTopic contentTopic1. See 14/WAKU2-MESSAGE for more details. If the WakuMessage version is set to 1, we use the 6/WAKU1-compatible data field with encryption. See 7/WAKU-DATA for more details.

- Node F requests to get messages filtered by PubSub topic pubtopic1 and ContentTopic contentTopic1. Node D subscribes F to this filter and will in the future forward messages that match that filter. See 12/WAKU2-FILTER for more details.

- Node A publishes msg1 on pubtopic1 and subscribes to that relay topic. msg1 is then relayed further from B to D, but not to C, since C doesn't subscribe to that topic. See 11/WAKU2-RELAY.

- Node D saves msg1 for possible later retrieval by other nodes. See 13/WAKU2-STORE.

- Node D also pushes msg1 to F, as it has previously subscribed F to this filter. See 12/WAKU2-FILTER.

- At a later time, Node E comes online. It then requests messages matching pubtopic1 and contentTopic1 from Node D. Node D responds with messages meeting these (and possibly other) criteria. See 13/WAKU2-STORE.
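The interaction above can be replayed with a toy in-memory model. This is a hypothetical sketch, not an implementation of the real protocols. Relay is reduced to flooding among peers subscribed to a topic instead of a GossipSub mesh. The topology (A-B, B-C, B-D) and the attribute names are assumptions chosen to match the description.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class WakuMessage:
    payload: bytes
    content_topic: str


class Node:
    """Toy node; the protocols it 'mounts' are modelled by its attributes."""

    def __init__(self, name, relay_topics=(), store=False):
        self.name = name
        self.relay_topics = set(relay_topics)  # pubsub topics relayed (relay)
        self.store = store                     # persist relayed messages (store)
        self.peers = []                        # relay connections
        self.archive = []                      # stored (pubsub_topic, message) pairs
        self.filter_subs = defaultdict(set)    # (pubsub, content) -> light peers
        self.inbox = []                        # messages pushed here via filter

    def connect(self, other):
        self.peers.append(other)
        other.peers.append(self)

    def relay(self, topic, msg, seen):
        """Flood msg to connected peers subscribed to topic (not real GossipSub)."""
        if self.name in seen or topic not in self.relay_topics:
            return
        seen.add(self.name)
        if self.store:
            self.archive.append((topic, msg))
        for light_peer in self.filter_subs.get((topic, msg.content_topic), ()):
            light_peer.inbox.append(msg)
        for peer in self.peers:
            peer.relay(topic, msg, seen)

    def query(self, topic, content_topic):
        """Store-style history query against this node's archive."""
        return [m for t, m in self.archive
                if t == topic and m.content_topic == content_topic]


# Assumed topology: A-B, B-C, B-D relay links; C only relays pubtopic2.
a = Node("A", {"pubtopic1"})
b = Node("B", {"pubtopic1", "pubtopic2"})
c = Node("C", {"pubtopic2"})
d = Node("D", {"pubtopic1"}, store=True)
f = Node("F")  # light node: no relay, receives via filter
for x, y in [(a, b), (b, c), (b, d)]:
    x.connect(y)

d.filter_subs[("pubtopic1", "contentTopic1")].add(f)  # F subscribes at D

msg1 = WakuMessage(b"hello", "contentTopic1")
seen = set()
a.relay("pubtopic1", msg1, seen)                      # A publishes msg1

history = d.query("pubtopic1", "contentTopic1")       # E's later query to D
```

Running it leaves `seen == {"A", "B", "D"}`. C never handles msg1, F receives it through D's filter, and D's archive answers E's later query.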

Appendix A: Upgradability and Compatibility

Compatibility with Waku Legacy

6/WAKU1 and Waku are different protocols altogether. They use a different transport protocol underneath: 6/WAKU1 is based on devp2p RLPx, while Waku uses libp2p. The protocols themselves also differ, as does their data format. Compatibility can be achieved only by using a bridge that not only talks both devp2p RLPx and libp2p, but also transfers (partially) the content of a packet from one version to the other.

See 15/WAKU-BRIDGE for details on a bidirectional bridge mode.

Appendix B: Security

Each protocol layer of Waku provides a distinct service and is associated with a separate set of security features and concerns. Therefore, the overall security of Waku depends on how the different layers are utilized. In this section, we give an overview of the security properties of Waku protocols against a static adversarial model, which is described below. Note that a more detailed security analysis of each Waku protocol is supplied in its respective specification.

Primary Adversarial Model

In the primary adversarial model, we consider the adversary to be a passive entity that attempts to collect information from others to conduct an attack, but does so without violating protocol definitions and instructions.

The following are not considered as part of the adversarial model:

- An adversary with a global view of all the peers and their connections.

- An adversary that can eavesdrop on communication links between arbitrary pairs of peers (unless the adversary is one end of the communication). Specifically, the communication channels are assumed to be secure.

Security Features

Pseudonymity

Waku by default guarantees pseudonymity for all of the protocol layers, since parties do not have to disclose their true identity and instead use their libp2p PeerID as their identifier. While pseudonymity is an appealing security feature, it does not guarantee full anonymity, since the actions taken under the same pseudonym (i.e., the same PeerID) can be linked together and potentially result in the re-identification of the true actor.

Anonymity / Unlinkability

At a high level, anonymity is the inability of an adversary to link an actor to its data or performed action (the actor and action are context-dependent). To be precise about linkability, we use the term Personally Identifiable Information (PII) to refer to any piece of data that could potentially be used to uniquely identify a party. For example, the signature verification key and the hash of one's static IP address are unique for each user and hence count as PII. Notice that users' actions can be traced through their PII (e.g., signatures), which creates a risk of re-identification. As such, we seek anonymity by avoiding linkability between actions and the actors or the actors' PII. Concerning anonymity, Waku provides the following features:

Publisher-Message Unlinkability: This feature signifies the unlinkability of a publisher to its published messages in the 11/WAKU2-RELAY protocol. Publisher-Message Unlinkability is enforced through the StrictNoSign policy, under which the data fields of pubsub messages that count as PII for the publisher must be left unspecified.

Subscriber-Topic Unlinkability: This feature stands for the unlinkability of the subscriber to its subscribed topics in the 11/WAKU2-RELAY protocol. Subscriber-Topic Unlinkability is achieved through the use of a single PubSub topic. As such, subscribers are not re-identifiable from their subscribed topic IDs, as the entire network is linked to the same topic ID. This level of unlinkability/anonymity is known as k-anonymity, where k is proportional to the system size (number of subscribers). Note that there is no hard limit on the number of pubsub topics; however, the use of one topic is recommended for the sake of anonymity.
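The following sketch makes the k-anonymity argument concrete. It counts how many peers share each topic, under the simplifying assumption that every peer subscribes to exactly one topic. The peer and topic names are hypothetical.

```python
from collections import Counter


def anonymity_set_sizes(subscriptions: dict[str, str]) -> dict[str, int]:
    """Return, for each pubsub topic, how many peers subscribe to it (k).

    Simplifying assumption: each peer subscribes to exactly one topic, so an
    observer who learns a peer's topic narrows it down to k candidates.
    """
    return dict(Counter(subscriptions.values()))


# Hypothetical networks of six peers.
one_topic = {f"peer{i}": "shared-topic" for i in range(6)}
split_topics = {f"peer{i}": f"topic-{i % 3}" for i in range(6)}

print(anonymity_set_sizes(one_topic))     # {'shared-topic': 6}
print(anonymity_set_sizes(split_topics))  # {'topic-0': 2, 'topic-1': 2, 'topic-2': 2}
```

Splitting six peers across three topics shrinks each anonymity set from 6 to 2. This is why a single shared topic is recommended.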

Spam protection

This property indicates that no adversary can flood the system (i.e., publish a large number of messages in a short amount of time), either accidentally or deliberately, with any kind of message, even if the message content is valid or useful. Spam protection is partly provided in 11/WAKU2-RELAY through the scoring mechanism provided by GossipSub v1.1. At a high level, peers use a scoring function to locally score the behavior of their connections and remove peers with a low score.

Data confidentiality, Integrity, and Authenticity

Confidentiality can be addressed through data encryption, whereas integrity and authenticity are achievable through digital signatures. These features are provided for in 14/WAKU2-MESSAGE (version 1) through payload encryption as well as encrypted signatures.

Security Considerations

Lack of anonymity/unlinkability in protocols involving direct connections, including 13/WAKU2-STORE and 12/WAKU2-FILTER:

Anonymity/unlinkability is not guaranteed in protocols such as 13/WAKU2-STORE and 12/WAKU2-FILTER, where peers need direct connections to benefit from the designated service. This is because peers use their PeerIDs to identify each other over direct connections, so the service obtained in the protocol is linkable to the beneficiary's PeerID (which counts as PII). For 13/WAKU2-STORE, the queried node is able to link the querying node's PeerID to its queried topics. Likewise, in 12/WAKU2-FILTER, a full node can link a light node's PeerID to its content filter.

Appendix C: Implementation Notes

Implementation Matrix

There are multiple implementations of Waku and its protocols, including nim-waku (Nim), go-waku (Go), and js-waku (JavaScript).

Below you can find an overview of the specifications that they implement as they relate to Waku. This includes Waku legacy specifications, as they are used for bridging between the two networks.

| Spec | nim-waku (Nim) | go-waku (Go) | js-waku (Node JS) | js-waku (Browser JS) |
|---|---|---|---|---|
| 6/WAKU1 | ✔ | | | |
| 7/WAKU-DATA | ✔ | ✔ | | |
| 8/WAKU-MAIL | ✔ | | | |
| 9/WAKU-RPC | ✔ | | | |
| 10/WAKU2 | ✔ | 🚧 | 🚧 | ✔ |
| 11/WAKU2-RELAY | ✔ | ✔ | ✔ | ✔ |
| 12/WAKU2-FILTER | ✔ | ✔ | | |
| 13/WAKU2-STORE | ✔ | ✔ | ✔* | ✔* |
| 14/WAKU2-MESSAGE | ✔ | ✔ | ✔ | ✔ |
| 15/WAKU2-BRIDGE | ✔ | | | |
| 16/WAKU2-RPC | ✔ | | | |
| 17/WAKU2-RLN-RELAY | 🚧 | | | |
| 18/WAKU2-SWAP | 🚧 | | | |
| 19/WAKU2-LIGHTPUSH | ✔ | ✔ | ✔** | ✔** |
| 21/WAKU2-FAULT-TOLERANT-STORE | ✔ | ✔ | | |

(*) js-waku implements 13/WAKU2-STORE as a querying node only.

(**) js-waku only implements 19/WAKU2-LIGHTPUSH requests.

Recommendations for Clients

To implement a minimal Waku client, we recommend implementing the following subset in the following order:

- 10/WAKU2 - this specification

- 11/WAKU2-RELAY - for basic operation

- 14/WAKU2-MESSAGE - version 0 (unencrypted)

- 13/WAKU2-STORE - for historical messaging (query mode only)

To get compatibility with Waku Legacy:

- 7/WAKU-DATA

- 14/WAKU2-MESSAGE - version 1 (encrypted with 7/WAKU-DATA)

For an interoperable keep-alive mechanism:

- libp2p ping protocol, with periodic pings to connected peers

Appendix D: Future work

The following features are currently experimental, under research, and in initial implementation:

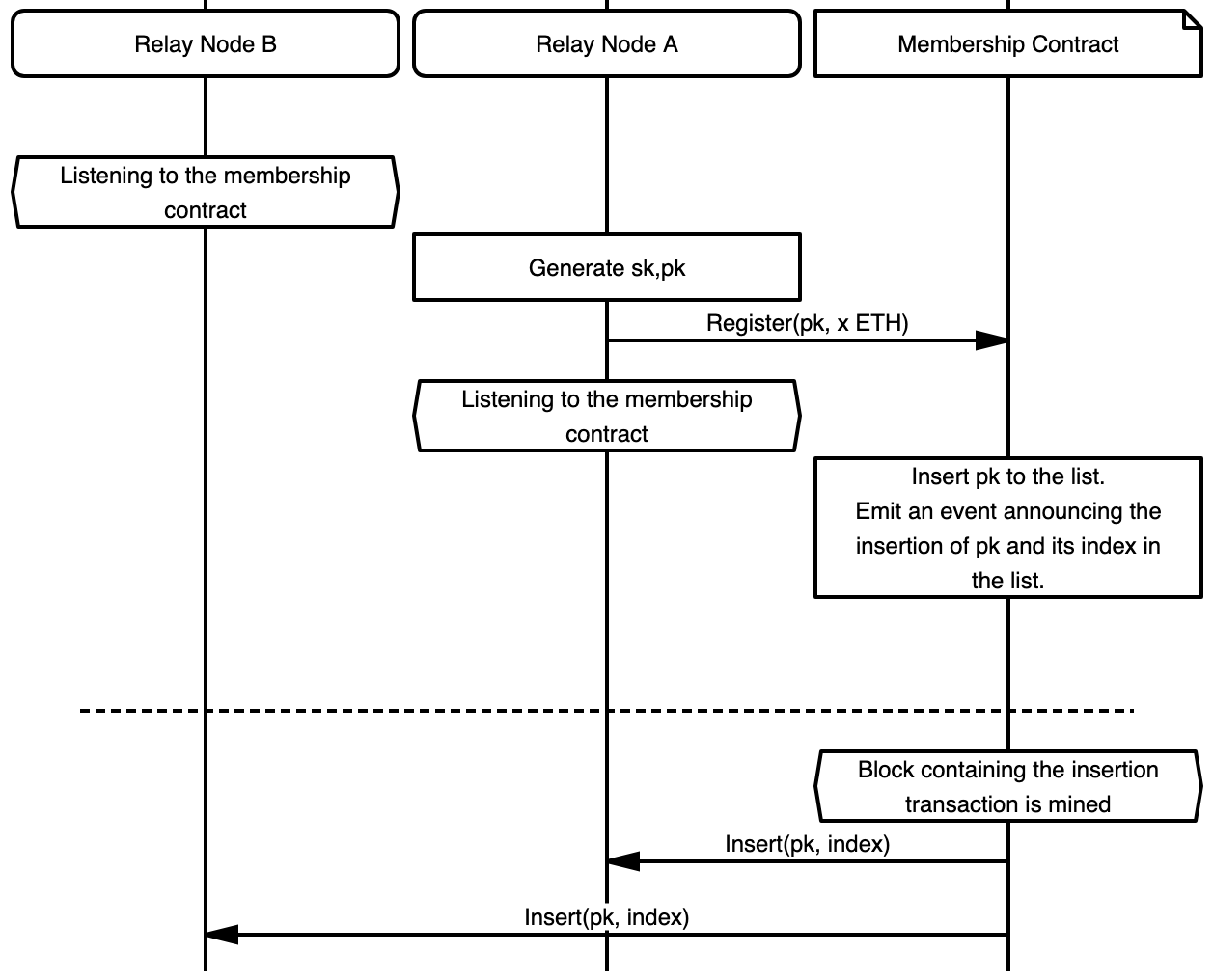

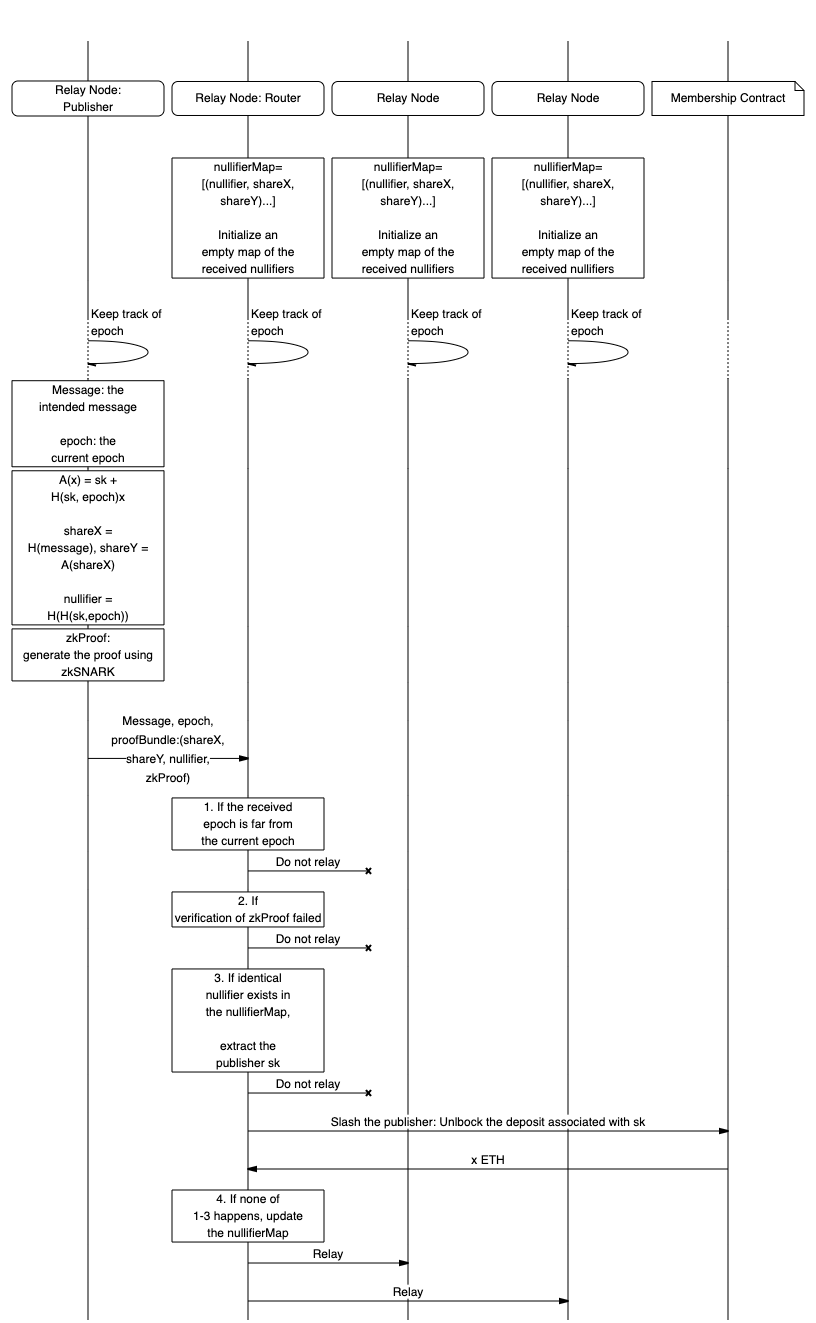

Economic Spam Resistance:

We aim to enable an incentivized spam protection technique to enhance 11/WAKU2-RELAY by using rate limiting nullifiers. More details on this can be found in 17/WAKU2-RLN-RELAY. In this advanced method, peers are limited to a certain rate of messaging per epoch, and an immediate financial penalty is enforced on spammers who break this rate.
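The secret-sharing idea behind rate limiting nullifiers shows why breaking the rate leads to an immediate penalty. As we understand the RLN construction, each member holds a secret a0 and derives a per-epoch slope a1 = H(a0, epoch). Every message then reveals one point (x, y) with x = H(message) and y = a0 + a1*x. Two messages from the same member in the same epoch lie on the same line, so anyone can solve for a0. The revealed secret is what enables the financial penalty.

This Python sketch uses SHA-256 reduced modulo 2^127 - 1 as a stand-in for the SNARK-friendly hash and field used in practice. It omits the zero-knowledge proof entirely, and all names are hypothetical.

```python
import hashlib

# Illustrative prime field; real RLN uses a SNARK-friendly field and hash.
P = 2**127 - 1


def h(*parts: bytes) -> int:
    """Hash byte strings to a field element (SHA-256 standing in for Poseidon)."""
    digest = hashlib.sha256(b"|".join(parts)).digest()
    return int.from_bytes(digest, "big") % P


def make_share(a0: int, epoch: int, message: bytes) -> tuple[int, int]:
    """Point (x, y) on the member's line for this epoch: y = a0 + a1 * x."""
    a1 = h(a0.to_bytes(16, "big"), epoch.to_bytes(8, "big"))  # per-epoch slope
    x = h(message)
    return x, (a0 + a1 * x) % P


def recover_secret(s1: tuple[int, int], s2: tuple[int, int]) -> int:
    """Solve for a0 from two points on the same line (same member, same epoch)."""
    (x1, y1), (x2, y2) = s1, s2
    if x1 == x2:
        raise ValueError("need shares for two different messages")
    a1 = (y2 - y1) * pow(x2 - x1, -1, P) % P  # slope via modular inverse
    return (y1 - a1 * x1) % P                  # intercept is the secret a0


secret = 123456789  # hypothetical member secret, must be < P
s1 = make_share(secret, epoch=42, message=b"first message")
s2 = make_share(secret, epoch=42, message=b"second message")
print(recover_secret(s1, s2) == secret)  # True: exceeding the rate leaks a0
```

A member who sends one message per epoch reveals only one point per line, which says nothing about a0. Shares from different epochs lie on different lines, so they cannot be combined either.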

Prevention of Denial of Service (DoS) and Node Incentivization:

Denial of service refers to the case where an adversarial node exhausts another node's service capacity (e.g., by making a large number of requests) and makes it unavailable to the rest of the system. DoS attacks are to be mitigated through the accounting model described in 18/WAKU2-SWAP. In a nutshell, peers have to pay for the service they obtain from each other. In addition to incentivizing the service provider, accounting also makes DoS attacks costly for malicious peers. The accounting model can be used in 13/WAKU2-STORE and 12/WAKU2-FILTER to protect against DoS attacks.

Additionally, this gives node operators who provide a useful service to the network an incentive to perform that service. See 18/WAKU2-SWAP for more details on this piece of work.
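A toy version of such an accounting model is sketched below. It is hypothetical throughout. 18/WAKU2-SWAP defines the actual accounting and settlement; the `Ledger` class, its `disconnect_threshold`, and the unit costs here are invented for illustration. The sketch shows that a peer making many requests without paying runs into a debt limit and stops being served.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Toy per-peer accounting; thresholds and prices are hypothetical."""
    disconnect_threshold: int = 10                 # max unpaid debt per peer
    balances: dict = field(default_factory=dict)   # peer -> units owed to us

    def serve(self, peer: str, cost: int) -> bool:
        """Record a served request; refuse once debt would exceed the limit."""
        owed = self.balances.get(peer, 0)
        if owed + cost > self.disconnect_threshold:
            return False                           # peer must pay first
        self.balances[peer] = owed + cost
        return True

    def settle(self, peer: str, amount: int) -> None:
        """Credit a payment against the peer's outstanding debt."""
        self.balances[peer] = max(0, self.balances.get(peer, 0) - amount)


ledger = Ledger(disconnect_threshold=5)
results = [ledger.serve("spammer", 2) for _ in range(4)]
print(results)  # [True, True, False, False]
```

Flooding requests stops paying off once the threshold is reached. An honest peer that settles its debt is served again.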

Copyright

Copyright and related rights waived via CC0.


11/WAKU2-RELAY

| Field | Value |
|---|---|
| Name | Waku v2 Relay |
| Slug | 11 |
| Status | stable |
| Editor | Hanno Cornelius |
| Contributors | Oskar Thorén, Sanaz Taheri |

Timeline

- 2026-01-16 (f01d5b9): chore: fix links (#260)
- 2026-01-16 (89f2ea8): Chore/mdbook updates (#258)
- 2025-12-22 (0f1855e): Chore/fix headers (#239)
- 2025-12-22 (b1a5783): Chore/mdbook updates (#237)
- 2025-12-18 (d03e699): ci: add mdBook configuration (#233)
- 2024-09-13 (3ab314d): Fix Files for Linting (#94)
- 2024-02-01 (b346ad2): Update relay.md
- 2024-02-01 (0904a8b): Update and rename RELAY.md to relay.md
- 2024-01-27 (8ff46fa): Rename WAKU2-RELAY.md to RELAY.md
- 2024-01-27 (4c4591c): Rename README.md to WAKU2-RELAY.md
- 2024-01-25 (eef961b): remove rfs folder
- 2024-01-25 (6874961): Create README.md

11/WAKU2-RELAY specifies a Publish/Subscribe approach to peer-to-peer messaging with a strong focus on privacy, censorship-resistance, security, and scalability. Its current implementation is a minor extension of the libp2p GossipSub protocol and prescribes gossip-based dissemination. As such, the scope is limited to defining a separate protocol id for 11/WAKU2-RELAY, establishing privacy and security requirements, and defining how the underlying GossipSub is to be interpreted and implemented within the Waku and cryptoeconomic domain.

11/WAKU2-RELAY should not be confused with libp2p circuit relay.

Protocol identifier: /vac/waku/relay/2.0.0

Security Requirements

The 11/WAKU2-RELAY protocol is designed to provide the following security properties under a static Adversarial Model. Note that data confidentiality, integrity, and authenticity are currently considered out of scope for 11/WAKU2-RELAY and must be handled by higher-layer protocols such as 14/WAKU2-MESSAGE.

- Publisher-Message Unlinkability: This property indicates that no adversarial entity can link a published Message to its publisher. This feature also implies the unlinkability of the publisher to its published topic ID, as the Message embodies the topic IDs.

- Subscriber-Topic Unlinkability: This feature stands for the inability of any adversarial entity to link a subscriber to its subscribed topic IDs.

Terminology

Personally identifiable information (PII) refers to any piece of data that can be used to uniquely identify a user. For example, the signature verification key, and the hash of one's static IP address are unique for each user and hence count as PII.

Adversarial Model

- Any entity running the 11/WAKU2-RELAY protocol is considered an adversary. This includes publishers, subscribers, and all the peers' direct connections. Furthermore, we consider the adversary to be a passive entity that attempts to collect information from others to conduct an attack, but does so without violating protocol definitions and instructions. For example, under the passive adversarial model, no malicious subscriber hides the messages it receives from other subscribers, as that is against the description of 11/WAKU2-RELAY. However, a malicious subscriber may learn which topics are subscribed to by which peers.

- The following are not considered as part of the adversarial model:

  - An adversary with a global view of all the peers and their connections.

  - An adversary that can eavesdrop on communication links between arbitrary pairs of peers (unless the adversary is one end of the communication). In other words, the communication channels are assumed to be secure.

Wire Specification

The PubSub interface specification defines the protobuf RPC messages exchanged between peers participating in a GossipSub network. We republish these messages here for ease of reference and define how 11/WAKU2-RELAY uses and interprets each field.

Protobuf definitions

The PubSub RPC messages are specified using protocol buffers v2:

syntax = "proto2";

message RPC {
  repeated SubOpts subscriptions = 1;
  repeated Message publish = 2;

  message SubOpts {
    optional bool subscribe = 1;
    optional string topicid = 2;
  }

  message Message {
    optional string from = 1;
    optional bytes data = 2;
    optional bytes seqno = 3;
    repeated string topicIDs = 4;
    optional bytes signature = 5;
    optional bytes key = 6;
  }
}

NOTE: The various control messages defined for GossipSub are used as specified there.

NOTE: The TopicDescriptor is not currently used by 11/WAKU2-RELAY.

Message fields

The Message protobuf defines the format in which content is relayed between peers. 11/WAKU2-RELAY specifies the following usage requirements for each field:

- The from field MUST NOT be used, following the StrictNoSign signature policy.

- The data field MUST be filled out with a WakuMessage. See 14/WAKU2-MESSAGE for more details.

- The seqno field MUST NOT be used, following the StrictNoSign signature policy.

- The topicIDs field MUST contain the content-topics that a message is being published on.

- The signature field MUST NOT be used, following the StrictNoSign signature policy.

- The key field MUST NOT be used, following the StrictNoSign signature policy.

SubOpts fields

The SubOpts protobuf defines the format in which subscription options are relayed between peers. An 11/WAKU2-RELAY node MAY decide to subscribe to or unsubscribe from topics by sending updates using SubOpts. The following usage requirements apply:

- The subscribe field MUST contain a boolean, where true indicates subscribe and false indicates unsubscribe to a topic.

- The topicid field MUST contain the pubsub topic.

Note: The topicid referring to pubsub topic and topicId referring to content-topic are detailed in 23/WAKU2-TOPICS.
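The sketch below hand-encodes SubOpts to show what it looks like on the wire, using the standard protobuf wire format. Each field is a key, (field_number << 3) | wire_type, followed by the value. Wire type 0 carries the varint subscribe flag; wire type 2 carries the length-delimited topicid string and embedded messages. The function names are hypothetical, and a real client would use generated protobuf code.

```python
def _varint(n: int) -> bytes:
    """Unsigned protobuf varint."""
    out = bytearray()
    while True:
        low, n = n & 0x7F, n >> 7
        out.append(low | (0x80 if n else 0))
        if not n:
            return bytes(out)


def _field(number: int, wire_type: int, value: bytes) -> bytes:
    """One field: key = (field_number << 3) | wire_type, then the value bytes."""
    return _varint((number << 3) | wire_type) + value


def encode_subopts(subscribe: bool, topicid: str) -> bytes:
    """Serialize SubOpts { subscribe = 1 (varint); topicid = 2 (string) }."""
    topic = topicid.encode("utf-8")
    return (_field(1, 0, _varint(int(subscribe)))
            + _field(2, 2, _varint(len(topic)) + topic))


def encode_rpc_subscriptions(subopts: list[bytes]) -> bytes:
    """Wrap encoded SubOpts in RPC.subscriptions (field 1, length-delimited)."""
    return b"".join(_field(1, 2, _varint(len(s)) + s) for s in subopts)
```

Because the schema is proto2, an explicitly set `subscribe = false` is still emitted (as `b"\x08\x00"`). A receiver can therefore tell an unsubscribe apart from a missing field.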

Signature Policy

The StrictNoSign option MUST be used to ensure that messages are built without the signature, key, from, and seqno fields. Note that this does not merely imply that these fields be empty, but that they MUST be absent from the marshalled message.
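A receiving node could check the StrictNoSign rule with a validator along these lines. This minimal sketch assumes a decoded message in which an absent optional field is represented as None; the `PubSubMessage` type and function name are hypothetical. An empty value such as `b""` still counts as present, matching the requirement that these fields be absent rather than merely empty.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PubSubMessage:
    """Mirror of the proto2 Message; None means the field is absent."""
    data: Optional[bytes] = None
    topicIDs: list = field(default_factory=list)
    from_: Optional[bytes] = None      # trailing underscore: `from` is a keyword
    seqno: Optional[bytes] = None
    signature: Optional[bytes] = None
    key: Optional[bytes] = None


FORBIDDEN = ("from_", "seqno", "signature", "key")


def strict_no_sign_violations(msg: PubSubMessage) -> list[str]:
    """Names of StrictNoSign fields that are present, even if empty."""
    return [name.rstrip("_") for name in FORBIDDEN
            if getattr(msg, name) is not None]
```

A node would drop any message for which this list is non-empty, rather than relaying it.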

Security Analysis

- Publisher-Message Unlinkability: To address publisher-message unlinkability, one should remove any PII from the published message. As such, 11/WAKU2-RELAY follows the StrictNoSign policy as described in the libp2p PubSub specs. As a result of the StrictNoSign policy, Messages should be built without the from, signature, and key fields, since each of these three fields individually counts as PII for the author of the message (one can link the creation of the message with the libp2p peerId and thus indirectly with the IP address of the publisher). Note that removing identifiable information from messages cannot lead to perfect unlinkability. The direct connections of a publisher might be able to figure out which Messages belong to that publisher by analyzing its traffic. The possibility of such inference may increase when the data field is not encrypted by upper-level protocols.

- Subscriber-Topic Unlinkability: To preserve subscriber-topic unlinkability, 10/WAKU2 recommends using a single PubSub topic in the 11/WAKU2-RELAY protocol. This allows immediate subscriber-topic unlinkability, where subscribers are not re-identifiable from their subscribed topic IDs, as the entire network is linked to the same topic ID. This level of unlinkability/anonymity is known as k-anonymity, where k is proportional to the system size (number of participants in the Waku relay protocol). However, note that 11/WAKU2-RELAY supports the use of more than one topic. If more than one topic ID is used, preserving unlinkability is the responsibility of the upper-level protocols, which MAY adopt a partitioned-topics technique to achieve k-anonymity for the subscribed peers.

Future work

- Economic spam resistance: In the spam-protected 11/WAKU2-RELAY protocol, no adversary can flood the system with spam messages (i.e., publish a large number of messages in a short amount of time). Spam protection is partly provided by GossipSub v1.1 through its scoring mechanism. At a high level, peers use a scoring function to locally score the behavior of their connections and remove peers with a low score. 11/WAKU2-RELAY aims to enable an advanced spam protection mechanism with economic disincentives by using Rate Limiting Nullifiers. In a nutshell, peers must conform to a certain message publishing rate per system-defined epoch; otherwise, they are financially penalized for exceeding the rate. More details on this new technique can be found in 17/WAKU2-RLN-RELAY.

- Providing Unlinkability, Integrity, and Authenticity simultaneously: Integrity and authenticity are typically addressed through digital signatures and Message Authentication Code (MAC) schemes. However, the use of digital signatures (where each signature is bound to a particular peer) contradicts the unlinkability requirement (messages signed under a certain signature key are verifiable by a verification key that is bound to a particular publisher). As such, integrity and authenticity are missing features in 11/WAKU2-RELAY in the interest of unlinkability. In future work, advanced signature schemes like group signatures can be used to enable authenticity, integrity, and unlinkability simultaneously. In a group signature scheme, a member of a group can anonymously sign a message on behalf of the group, such that the true signer is indistinguishable from other group members.

Copyright

Copyright and related rights waived via CC0.


12/WAKU2-FILTER

| Field | Value |
|---|---|
| Name | Waku v2 Filter |
| Slug | 12 |
| Status | draft |
| Editor | Hanno Cornelius |
| Contributors | Dean Eigenmann, Oskar Thorén, Sanaz Taheri, Ebube Ud |

Timeline

- 2026-01-16 (f01d5b9): chore: fix links (#260)
- 2026-01-16 (89f2ea8): Chore/mdbook updates (#258)
- 2025-12-22 (0f1855e): Chore/fix headers (#239)
- 2025-12-22 (b1a5783): Chore/mdbook updates (#237)
- 2025-12-18 (d03e699): ci: add mdBook configuration (#233)
- 2025-03-25 (e8a3f8a): 12/WAKU2-FILTER: Update (#119)
- 2024-09-13 (3ab314d): Fix Files for Linting (#94)
- 2024-02-01 (e4d8f27): Update and rename FILTER.md to filter.md
- 2024-01-27 (046a3b7): Rename WAKU2-FILTER.md to FILTER.md
- 2024-01-27 (57124a7): Rename README.md to WAKU2-FILTER.md
- 2024-01-27 (eef961b): remove rfs folder
- 2024-01-25 (940d795): Rename waku/12/README.md to waku/rfcs/standards/core/12/README.md
- 2024-01-22 (420adf1): Vac RFC index initial structure

Protocol identifiers:

- filter-subscribe: /vac/waku/filter-subscribe/2.0.0-beta1

- filter-push: /vac/waku/filter-push/2.0.0-beta1

Abstract

This specification describes the 12/WAKU2-FILTER protocol, which enables a client to subscribe to a subset of real-time messages from a Waku peer. This is a more lightweight version of 11/WAKU2-RELAY, useful for bandwidth-restricted devices. It is often used by nodes with lower resource limits to subscribe to full Relay nodes and only receive the subset of messages they desire, based on content topic interest.

Motivation

Unlike the 13/WAKU2-STORE protocol for historical messages, this protocol allows for native lower latency scenarios, such as instant messaging. It is thus complementary to it.

Strictly speaking, it is not just doing basic request-response, but performs sender push based on receiver intent. While this can be seen as a form of light publish/subscribe, it is only used between two nodes in a direct fashion. Unlike the Gossip domain, this is suitable for light nodes which put a premium on bandwidth. No gossiping takes place.

It is worth noting that a light node could get by with only using the 13/WAKU2-STORE protocol to query for a recent time window, provided it is acceptable to do frequent polling.

Semantics

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Content filtering

Content filtering is a way to do message-based filtering. Currently, the only content filter applied is on contentTopic.

Terminology

The term Personally identifiable information (PII) refers to any piece of data that can be used to uniquely identify a user. For example, the signature verification key, and the hash of one's static IP address are unique for each user and hence count as PII.

Protobuf

syntax = "proto3";

// Protocol identifier: /vac/waku/filter-subscribe/2.0.0-beta1
message FilterSubscribeRequest {
  enum FilterSubscribeType {
    SUBSCRIBER_PING = 0;
    SUBSCRIBE = 1;
    UNSUBSCRIBE = 2;
    UNSUBSCRIBE_ALL = 3;
  }

  string request_id = 1;
  FilterSubscribeType filter_subscribe_type = 2;

  // Filter criteria
  optional string pubsub_topic = 10;
  repeated string content_topics = 11;
}

message FilterSubscribeResponse {
  string request_id = 1;
  uint32 status_code = 10;
  optional string status_desc = 11;
}

// Protocol identifier: /vac/waku/filter-push/2.0.0-beta1
message MessagePush {
  WakuMessage waku_message = 1;
  optional string pubsub_topic = 2;
}

Filter-Subscribe

A filter service node MUST support the filter-subscribe protocol to allow filter clients to subscribe to, modify, refresh, and unsubscribe from a desired set of filter criteria. The combination of different filter criteria for a specific filter client node is termed a "subscription". A filter client is interested in receiving messages matching the filter criteria in its registered subscriptions.

Since a filter service node is consuming resources to provide this service, it MAY account for usage and adapt its service provision to certain clients.

Filter Subscribe Request

A client node MUST send all filter requests in a FilterSubscribeRequest message.

This request MUST contain a request_id.

The request_id MUST be a uniquely generated string.

Each request MUST include a filter_subscribe_type, indicating the type of request.

Filter Subscribe Response

When responding to a FilterSubscribeRequest, a filter service node SHOULD send a FilterSubscribeResponse with a request_id matching that of the request. This response MUST contain a status_code indicating whether the request was successful. Successful status codes are in the 2xx range. Client nodes SHOULD consider all other status codes as error codes and assume that the requested operation has failed.

In addition, the filter service node MAY choose to provide a more detailed status description in the status_desc field.
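To illustrate the request and response rules above, here is a minimal Python sketch. The dataclasses are hypothetical stand-ins for the protobuf messages (not a generated API), `uuid4` is only one assumed way to obtain a unique request_id, and the helper treats a response as successful only when its request_id matches the request and its status_code is in the 2xx range.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


# Hypothetical Python mirrors of the protobuf messages; illustrative only.
@dataclass
class FilterSubscribeRequest:
    filter_subscribe_type: int  # 0..3, see FilterSubscribeType
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    pubsub_topic: Optional[str] = None
    content_topics: List[str] = field(default_factory=list)


@dataclass
class FilterSubscribeResponse:
    request_id: str
    status_code: int
    status_desc: Optional[str] = None


def is_success(request: FilterSubscribeRequest,
               response: FilterSubscribeResponse) -> bool:
    """Accept only a 2xx response that answers *this* request."""
    if response.request_id != request.request_id:
        return False  # response belongs to a different request
    return 200 <= response.status_code <= 299
```

Every non-2xx code, including ones the client does not recognize, is treated as a failure, which matches the SHOULD above.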

Filter matching

In the description of each request type below, the term "filter criteria" refers to the combination of a pubsub_topic and a set of content_topics. The request MAY include filter criteria, depending on the selected filter_subscribe_type. If the request contains filter criteria, it MUST contain a pubsub_topic, and the content_topics set MUST NOT be empty.

A 14/WAKU2-MESSAGE matches filter criteria when its content_topic is in the content_topics set and it was published on a matching pubsub_topic.
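The matching rule can be sketched as a pure function. This is a hypothetical helper, not part of any Waku implementation, and it assumes that a "matching" pubsub_topic means string equality.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class FilterCriteria:
    pubsub_topic: str
    content_topics: FrozenSet[str]

    def is_valid(self) -> bool:
        # Criteria MUST carry a pubsub_topic and a non-empty content_topics set.
        return bool(self.pubsub_topic) and len(self.content_topics) > 0


def matches(criteria: FilterCriteria, msg_pubsub_topic: str,
            msg_content_topic: str) -> bool:
    """True if the message was published on the criteria's pubsub topic
    (assumed: exact string equality) and its content topic is in the set."""
    return (msg_pubsub_topic == criteria.pubsub_topic
            and msg_content_topic in criteria.content_topics)
```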

Filter Subscribe Types

The filter-subscribe types are defined as follows:

SUBSCRIBER_PING

A filter client that sends a FilterSubscribeRequest with filter_subscribe_type set to SUBSCRIBER_PING requests that the filter service node SHOULD indicate whether it has any active subscriptions for this client. The filter client SHOULD exclude any filter criteria from the request.

The filter service node SHOULD respond with a success status_code if it has any active subscriptions for this client, or an error status_code if not. The filter service node SHOULD ignore any filter criteria in the request.

SUBSCRIBE

A filter client that sends a FilterSubscribeRequest with filter_subscribe_type set to SUBSCRIBE requests that the filter service node SHOULD push messages matching this filter to the client. The filter client MUST include the desired filter criteria in the request. A client MAY use this request type to modify an existing subscription by providing additional filter criteria in a new request. A client MAY use this request type to refresh an existing subscription by providing the same filter criteria in a new request.

The filter service node SHOULD respond with a success status_code if it successfully honored this request, or an error status_code if not. The filter service node SHOULD respond with an error status_code and discard the request if the FilterSubscribeRequest does not contain valid filter criteria, i.e., both a pubsub_topic and a non-empty content_topics set.

UNSUBSCRIBE

A filter client that sends a FilterSubscribeRequest with filter_subscribe_type set to UNSUBSCRIBE requests that the filter service node SHOULD stop pushing messages matching this filter to the client. The filter client MUST include the filter criteria it desires to unsubscribe from in the request. A client MAY use this request type to modify an existing subscription by providing, in a new request, a subset of the original filter criteria to unsubscribe from.

The filter service node SHOULD respond with a success status_code if it successfully honored this request, or an error status_code if not. The filter service node SHOULD respond with an error status_code and discard the request if the unsubscribe request does not contain valid filter criteria, i.e., both a pubsub_topic and a non-empty content_topics set.

UNSUBSCRIBE_ALL

A filter client that sends a FilterSubscribeRequest with filter_subscribe_type set to UNSUBSCRIBE_ALL requests that the filter service node SHOULD stop pushing messages matching any filter to the client. The filter client SHOULD exclude any filter criteria from the request.

The filter service node SHOULD remove any existing subscriptions for this client. It SHOULD respond with a success status_code if it successfully honored this request, or an error status_code if not.
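The four request types can be summarized as a small in-memory subscription table on the service node. This is a sketch under assumptions: the class and method names are hypothetical, subscriptions are keyed by an opaque peer ID string, and the concrete codes 200, 400 and 404 are illustrative choices, since the specification only requires success codes to be in the 2xx range.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set, Tuple

SUBSCRIBER_PING, SUBSCRIBE, UNSUBSCRIBE, UNSUBSCRIBE_ALL = 0, 1, 2, 3


class SubscriptionTable:
    """Hypothetical store: peer_id -> set of (pubsub_topic, content_topic)."""

    def __init__(self) -> None:
        self._subs: Dict[str, Set[Tuple[str, str]]] = defaultdict(set)

    def handle(self, peer_id: str, req_type: int,
               pubsub_topic: Optional[str] = None,
               content_topics: Iterable[str] = ()) -> int:
        """Apply one FilterSubscribeRequest; return an HTTP-style status code."""
        topics = list(content_topics)
        if req_type == SUBSCRIBER_PING:
            # Filter criteria, if any, are ignored for a ping.
            return 200 if self._subs.get(peer_id) else 404
        if req_type == UNSUBSCRIBE_ALL:
            self._subs.pop(peer_id, None)
            return 200
        if req_type in (SUBSCRIBE, UNSUBSCRIBE):
            if not pubsub_topic or not topics:
                return 400  # invalid filter criteria: discard the request
            pairs = {(pubsub_topic, ct) for ct in topics}
            if req_type == SUBSCRIBE:
                # Extra criteria extend the subscription; identical
                # criteria leave it unchanged (a refresh).
                self._subs[peer_id] |= pairs
            else:
                self._subs[peer_id] -= pairs
                if not self._subs[peer_id]:
                    del self._subs[peer_id]
            return 200
        return 400  # unknown request type
```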

Filter-Push

A filter client node MUST support the filter-push protocol to allow filter service nodes to push messages matching registered subscriptions to this client.

A filter service node SHOULD push all messages matching the filter criteria in a registered subscription to the subscribed filter client. These WakuMessages are likely to come from 11/WAKU2-RELAY, but they MAY also originate from other sources or protocols; this is up to the consumer of the protocol.

If a message push fails, the filter service node MAY consider the client node to be unreachable. If a specific filter client node is not reachable from the service node for a period of time, the filter service node MAY choose to stop pushing messages to the client and remove its subscription. This period is up to the service node implementation; 1 minute is RECOMMENDED as a reasonable default.
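One way a service node might apply the unreachable-client rule is to remember when pushes to a client started failing, and evict the subscription once the RECOMMENDED 1-minute window has elapsed. The tracker below is a hypothetical helper; its clock is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, Dict

DEFAULT_UNREACHABLE_TIMEOUT_S = 60.0  # RECOMMENDED default from the text


class ReachabilityTracker:
    """Hypothetical helper: tracks when each client first became unreachable."""

    def __init__(self, timeout_s: float = DEFAULT_UNREACHABLE_TIMEOUT_S,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock
        self._failing_since: Dict[str, float] = {}

    def record_push(self, peer_id: str, ok: bool) -> None:
        if ok:
            self._failing_since.pop(peer_id, None)  # reachable again
        else:
            # Keep the time of the *first* failure in the current streak.
            self._failing_since.setdefault(peer_id, self.clock())

    def should_evict(self, peer_id: str) -> bool:
        since = self._failing_since.get(peer_id)
        return since is not None and self.clock() - since >= self.timeout_s
```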

Message Push

Each message MUST be pushed in a MessagePush message.

Each MessagePush MUST contain one (and only one) waku_message.

If this message was received on a specific pubsub_topic, the pubsub_topic SHOULD be included in the MessagePush. A filter client SHOULD NOT respond to a MessagePush. Since the filter protocol does not include caching or fault tolerance, this is a best-effort push service with no bundling or guaranteed retransmission of messages.

A filter client SHOULD verify that each MessagePush it receives originated from a service node where the client has an active subscription, and that it matches filter criteria belonging to that subscription.
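On the client side, the verification step might look like the following sketch. The FilterClient class is hypothetical. It also assumes that, when a push omits the optional pubsub_topic, matching on content topic alone is acceptable; the text does not state this.

```python
from typing import Dict, Optional, Set, Tuple


class FilterClient:
    """Hypothetical client-side view of its active subscriptions."""

    def __init__(self) -> None:
        # service_peer_id -> set of (pubsub_topic, content_topic) pairs
        self.subscriptions: Dict[str, Set[Tuple[str, str]]] = {}

    def accept_push(self, from_peer: str, content_topic: str,
                    pubsub_topic: Optional[str]) -> bool:
        """True only if the push comes from a node we subscribed with
        and matches criteria registered with that node."""
        subs = self.subscriptions.get(from_peer)
        if not subs:
            return False  # no active subscription with this service node
        if pubsub_topic is None:
            # Assumption: fall back to a content-topic-only check.
            return any(ct == content_topic for _, ct in subs)
        return (pubsub_topic, content_topic) in subs
```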

Adversarial Model

Any node running the WakuFilter protocol, i.e., both the subscriber node and the queried node, is considered an adversary. Furthermore, we consider the adversary to be a passive entity that attempts to collect information from other nodes to conduct an attack, but does so without violating protocol definitions and instructions. For example, under the passive adversarial model, no malicious node intentionally hides the messages matching one's subscribed content filter, as that would violate the WakuFilter protocol description.

The following are not considered part of the adversarial model:

- An adversary with a global view of all the nodes and their connections.
- An adversary that can eavesdrop on communication links between arbitrary pairs of nodes (unless the adversary is one end of the communication). Specifically, the communication channels are assumed to be secure.

Security Considerations

Note that while using WakuFilter allows light nodes to save bandwidth, it comes at a privacy cost: they need to disclose the topics they are interested in to the full nodes to retrieve the relevant messages. Currently, anonymous subscription is not supported by WakuFilter; however, potential solutions are discussed below.

Future Work

Anonymous filter subscription:

This feature guarantees that nodes can anonymously subscribe to a message filter (i.e., without revealing their exact content filter). As such, no adversary in the WakuFilter protocol would be able to link nodes to their subscribed content filters.

The current version of the WakuFilter protocol does not provide anonymity, as the subscribing node has a direct connection to the full node and explicitly submits its content filter to be notified about matching messages. However, one can consider preserving anonymity in one of the following ways:

- By hiding the source of the subscription, i.e., anonymous communication. That is, the subscribing node shall hide all its PII in its filter request, e.g., its IP address. This can be achieved by using a proxy server or Tor.

Note that the current structure of filter requests, i.e., FilterSubscribeRequest, does not embody any piece of PII; otherwise, such data fields would have to be treated carefully to achieve anonymity.

- By deploying secure two-party computation (2PC), in which the subscribing node obtains the messages matching a content filter, whereas the full node learns nothing about the content filter or the messages pushed to the subscribing node. Examples of such 2PC protocols are Oblivious Transfer and one-way Private Set Intersection (PSI).

Copyright

Copyright and related rights waived via CC0.

Previous versions

References

Informative

12/WAKU2-FILTER

| Field | Value |
|---|---|
| Name | Waku v2 Filter |
| Slug | 12 |
| Status | draft |
| Editor | Hanno Cornelius [email protected] |
| Contributors | Dean Eigenmann [email protected], Oskar Thorén [email protected], Sanaz Taheri [email protected], Ebube Ud [email protected] |

Timeline

- 2026-01-21 (a00f16e): chore: mdbook fixes (#265)
- 2026-01-16 (f01d5b9): chore: fix links (#260)
- 2026-01-16 (89f2ea8): Chore/mdbook updates (#258)
- 2025-12-22 (0f1855e): Chore/fix headers (#239)
- 2025-12-22 (b1a5783): Chore/mdbook updates (#237)
- 2025-12-18 (d03e699): ci: add mdBook configuration (#233)
- 2025-03-25 (e8a3f8a): 12/WAKU2-FILTER: Update (#119)
- 2024-09-13 (3ab314d): Fix Files for Linting (#94)
- 2024-02-05 (d41f106): Update filter.md
- 2024-02-05 (8436a31): Update and rename README.md to filter.md
- 2024-01-27 (eef961b): remove rfs folder
- 2024-01-25 (420a51b): Rename waku/rfcs/core/12/previous-versions00/README.md to waku/rfcs/standards/core/12/previous-versions00/README.md
- 2024-01-25 (755fea9): Rename waku/12/previous-versions/00/README.md to waku/rfcs/core/12/previous-versions00/README.md
- 2024-01-22 (420adf1): Vac RFC index initial structure

WakuFilter is a protocol that enables subscribing to messages that a peer receives. It is a more lightweight version of WakuRelay, specifically designed for bandwidth-restricted devices, because light nodes subscribe to full nodes and receive only the messages they desire.

Content filtering

Protocol identifier: /vac/waku/filter/2.0.0-beta1

Content filtering is a way to do message-based filtering. Currently, the only content filter applied is on contentTopic. This corresponds to topics in Waku v1.

Rationale

Unlike the store protocol for historical messages, this protocol allows for native lower-latency scenarios such as instant messaging. It is thus complementary to it.

Strictly speaking, it is not just doing basic request-response, but performs sender push based on receiver intent. While this can be seen as a form of light pub/sub, it is only used between two nodes in a direct fashion. Unlike the Gossip domain, this is meant for light nodes which put a premium on bandwidth. No gossiping takes place.

It is worth noting that a light node could get by with only using the store protocol to query for a recent time window, provided it is acceptable to do frequent polling.

Design Requirements

The effectiveness and reliability of the content filtering service enabled by the WakuFilter protocol rely on the high availability of the full nodes as service providers. To this end, full nodes must feature high uptime (to persistently listen for and capture network messages) as well as high bandwidth (to provide timely message delivery to light nodes).

Security Consideration

Note that while using WakuFilter allows light nodes to save bandwidth, it comes at a privacy cost: they need to disclose the topics they are interested in to the full nodes to retrieve the relevant messages. Currently, anonymous subscription is not supported by WakuFilter; however, potential solutions are sketched below in the Future Work section.

Terminology

The term Personally identifiable information (PII) refers to any piece of data that can be used to uniquely identify a user. For example, the signature verification key, and the hash of one's static IP address are unique for each user and hence count as PII.

Adversarial Model

Any node running the WakuFilter protocol, i.e., both the subscriber node and the queried node, is considered an adversary. Furthermore, we consider the adversary to be a passive entity that attempts to collect information from other nodes to conduct an attack, but does so without violating protocol definitions and instructions. For example, under the passive adversarial model, no malicious node intentionally hides the messages matching one's subscribed content filter, as that would violate the WakuFilter protocol description.

The following are not considered part of the adversarial model:

- An adversary with a global view of all the nodes and their connections.
- An adversary that can eavesdrop on communication links between arbitrary pairs of nodes (unless the adversary is one end of the communication). Specifically, the communication channels are assumed to be secure.

Protobuf

message FilterRequest {
  bool subscribe = 1;
  string topic = 2;
  repeated ContentFilter contentFilters = 3;

  message ContentFilter {
    string contentTopic = 1;
  }
}

message MessagePush {
  repeated WakuMessage messages = 1;
}

message FilterRPC {
  string requestId = 1;
  FilterRequest request = 2;
  MessagePush push = 3;
}

FilterRPC

A node MUST send all Filter messages (FilterRequest, MessagePush) wrapped inside a FilterRPC. This allows the node handler to determine how to handle a message, as the Waku Filter protocol is not a request-response based protocol but instead a push-based system.

The requestId MUST be a uniquely generated string. When a MessagePush is sent, the requestId MUST match the requestId of the subscribing FilterRequest whose filters matched the message causing it to be pushed.
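As a rough illustration of why the FilterRPC wrapper helps, a receiving node can dispatch on which field of the envelope is set. The Python types below are simplified, hypothetical mirrors of the protobuf messages (content filters are flattened to a list of strings), and the returned strings are illustrative labels, not protocol values.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class FilterRequest:
    subscribe: bool
    topic: str = ""
    content_topics: List[str] = field(default_factory=list)


@dataclass
class MessagePush:
    messages: List[dict] = field(default_factory=list)  # simplified WakuMessages


@dataclass
class FilterRPC:
    request_id: str
    request: Optional[FilterRequest] = None
    push: Optional[MessagePush] = None


def dispatch(rpc: FilterRPC, active_request_ids: Set[str]) -> str:
    """Classify an incoming FilterRPC. `active_request_ids` holds the
    requestIds of FilterRequests this node has sent."""
    if rpc.request is not None:
        return "subscribe" if rpc.request.subscribe else "unsubscribe"
    if rpc.push is not None:
        # A push MUST carry the requestId of the FilterRequest it answers.
        if rpc.request_id in active_request_ids:
            return "push"
        return "unsolicited-push"
    return "empty"
```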

FilterRequest

A FilterRequest contains an optional topic, zero or more content filters, and a boolean signifying whether to subscribe to or unsubscribe from the given filters. True signifies 'subscribe' and false signifies 'unsubscribe'.

A node that sends the RPC with a filter request and subscribe set to 'true' requests that the filter node SHOULD notify the light requesting node of messages matching this filter.

A node that sends the RPC with a filter request and subscribe set to 'false' requests that the filter node SHOULD stop notifying the light requesting node of messages matching this filter, if it is currently doing so.

A filter matches when its content filter and, optionally, its topic match. A content filter matches when the contentTopic field of a WakuMessage is the same as the content filter's contentTopic.
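The matching rule of this earlier version can be sketched as follows. This is a hypothetical helper. It assumes that an empty or unset topic means "match any topic" and that a FilterRequest with zero content filters matches no message; the text leaves both cases open.

```python
from typing import List, Optional


def legacy_filter_matches(filter_content_topics: List[str],
                          filter_topic: Optional[str],
                          msg_content_topic: str,
                          msg_pubsub_topic: str) -> bool:
    """The content filter must match; the topic is checked only when the
    FilterRequest set one (assumed semantics)."""
    if msg_content_topic not in filter_content_topics:
        return False
    return not filter_topic or filter_topic == msg_pubsub_topic
```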

A filter node SHOULD honor this request, though it MAY choose not to do so. If it chooses not to do so, it MAY tell the light node why. The mechanism for doing this is currently not specified. To notify the light node, a filter node sends a MessagePush message.

Since such a filter node is doing extra work for a light node, it MAY also account for usage and be selective in how much service it provides. This mechanism is currently planned but underspecified.

MessagePush

A filter node that has received a filter request SHOULD push all messages that match this filter to a light node. These WakuMessages are likely to come from the relay protocol and be kept at the node, but they MAY also originate from other sources or protocols. This is up to the consumer of the protocol.

A filter node MUST NOT send a push message for messages that have not been requested via a FilterRequest.

If a specific light node isn't connected to a filter node for some specific period of time (e.g., a TTL), then the filter node MAY choose not to push these messages to the node. This period is up to the consumer of the protocol and node implementation, though a reasonable default is one minute.

Future Work

Anonymous filter subscription:

This feature guarantees that nodes can anonymously subscribe to a message filter (i.e., without revealing their exact content filter). As such, no adversary in the WakuFilter protocol would be able to link nodes to their subscribed content filters.

The current version of the WakuFilter protocol does not provide anonymity, as the subscribing node has a direct connection to the full node and explicitly submits its content filter to be notified about matching messages. However, one can consider preserving anonymity in one of the following ways:

- By hiding the source of the subscription, i.e., anonymous communication. That is, the subscribing node shall hide all its PII in its filter request, e.g., its IP address. This can be achieved by using a proxy server or Tor.

Note that the current structure of filter requests, i.e., FilterRPC, does not embody any piece of PII; otherwise, such data fields would have to be treated carefully to achieve anonymity.

- By deploying secure two-party computation (2PC), in which the subscribing node obtains the messages matching a content filter, whereas the full node learns nothing about the content filter or the messages pushed to the subscribing node. Examples of such 2PC protocols are Oblivious Transfer and one-way Private Set Intersection (PSI).

Copyright

Copyright and related rights waived via CC0.

References

13/WAKU2-STORE

| Field | Value |
|---|---|
| Name | Waku Store Query |
| Slug | 13 |
| Status | draft |
| Editor | Hanno Cornelius [email protected] |
| Contributors | Dean Eigenmann [email protected], Oskar Thorén [email protected], Aaryamann Challani [email protected], Sanaz Taheri [email protected] |

Timeline

- 2026-01-16 (f01d5b9): chore: fix links (#260)
- 2026-01-16 (89f2ea8): Chore/mdbook updates (#258)
- 2025-12-22 (0f1855e): Chore/fix headers (#239)
- 2025-12-22 (b1a5783): Chore/mdbook updates (#237)
- 2025-12-18 (d03e699): ci: add mdBook configuration (#233)
- 2025-04-15 (1b8b2ac): Add missing status to 13/WAKU-STORE (#149)
- 2025-02-03 (a60a2c4): 13/WAKU-STORE: Update (#124)
- 2024-09-13 (3ab314d): Fix Files for Linting (#94)
- 2024-08-05 (eb25cd0): chore: replace email addresses (#86)
- 2024-03-21 (2eaa794): Broken Links + Change Editors (#26)
- 2024-02-01 (755be94): Update and rename STORE.md to store.md
- 2024-01-27 (3baed07): Rename README.md to STORE.md
- 2024-01-27 (eef961b): remove rfs folder
- 2024-01-25 (51e2879): Create README.md

Abstract

This specification explains the WAKU2-STORE protocol, which enables querying of 14/WAKU2-MESSAGEs.

Protocol identifier: /vac/waku/store-query/3.0.0

Terminology

The term PII, Personally Identifiable Information, refers to any piece of data that can be used to uniquely identify a user. For example, the signature verification key, and the hash of one's static IP address are unique for each user and hence count as PII.

Wire Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC2119.

Design Requirements

The concept of ephemeral messages introduced in 14/WAKU2-MESSAGE affects WAKU2-STORE as well.

Nodes running WAKU2-STORE SHOULD support ephemeral messages as specified in 14/WAKU2-MESSAGE.

Nodes running WAKU2-STORE SHOULD NOT store messages with the ephemeral flag set to true.

Payloads

syntax = "proto3";

// Protocol identifier: /vac/waku/store-query/3.0.0
package waku.store.v3;

import "waku/message/v1/message.proto";

message WakuMessageKeyValue {
  optional bytes message_hash = 1; // Globally unique key for a Waku Message

  // Full message content and associated pubsub_topic as value
  optional waku.message.v1.WakuMessage message = 2;
  optional string pubsub_topic = 3;
}

message StoreQueryRequest {
  string request_id = 1;
  bool include_data = 2; // Response should include full message content

  // Filter criteria for content-filtered queries
  optional string pubsub_topic = 10;
  repeated string content_topics = 11;
  optional sint64 time_start = 12;
  optional sint64 time_end = 13;

  // List of key criteria for lookup queries
  repeated bytes message_hashes = 20; // Message hashes (keys) to lookup

  // Pagination info. 50 Reserved
  optional bytes pagination_cursor = 51; // Message hash (key) from where to start query (exclusive)
  bool pagination_forward = 52;
  optional uint64 pagination_limit = 53;
}

message StoreQueryResponse {
  string request_id = 1;

  optional uint32 status_code = 10;
  optional string status_desc = 11;

  repeated WakuMessageKeyValue messages = 20;

  optional bytes pagination_cursor = 51;
}

General Store Query Concepts

Waku Message Key-Value Pairs

The store query protocol operates as a query protocol for a key-value store of historical messages, with each entry having a 14/WAKU2-MESSAGE and its associated pubsub_topic as the value, and a deterministic message hash as the key. The store can be queried to return either a set of keys or a set of key-value pairs.

Within the store query protocol, the 14/WAKU2-MESSAGE keys and values MUST be represented in a WakuMessageKeyValue message. This message MUST contain the deterministic message_hash as the key. It MAY contain the full 14/WAKU2-MESSAGE and associated pubsub topic as the value in the message and pubsub_topic fields, depending on the use case as set out below.

If the message contains a value entry in addition to the key, both the message and pubsub_topic fields MUST be populated. The message MUST NOT have either message or pubsub_topic populated with the other unset. Both fields MUST either be set or unset.
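The all-or-nothing rule for the value part of a WakuMessageKeyValue can be checked with a sketch like this one. The class is a simplified, hypothetical stand-in for the protobuf message, with the WakuMessage reduced to opaque bytes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WakuMessageKeyValue:
    message_hash: Optional[bytes] = None
    message: Optional[bytes] = None  # serialized WakuMessage, simplified
    pubsub_topic: Optional[str] = None

    def is_well_formed(self) -> bool:
        if not self.message_hash:
            return False  # the key MUST always be present
        # The value is all-or-nothing: both fields set, or both unset.
        return (self.message is None) == (self.pubsub_topic is None)
```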

Waku Message Store Eligibility

In order for a message to be eligible for storage:

- it MUST be a valid 14/WAKU2-MESSAGE.
- the timestamp field MUST be populated with the Unix epoch time, in nanoseconds, at which the message was generated. If, at the time of storage, the timestamp deviates by more than 20 seconds either into the past or the future when compared to the store node's internal clock, the store node MAY reject the message.
- the ephemeral field MUST be set to false.
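A minimal eligibility check might look like the sketch below. It assumes that 14/WAKU2-MESSAGE validation has already happened elsewhere, treats a zero timestamp (the proto3 default) as unpopulated, and chooses to exercise the optional rejection of messages more than 20 seconds off the local clock. StoredCandidate is a hypothetical type.

```python
import time
from dataclasses import dataclass
from typing import Optional

MAX_CLOCK_DRIFT_NS = 20 * 1_000_000_000  # 20 seconds, in nanoseconds


@dataclass
class StoredCandidate:
    timestamp: Optional[int]  # Unix epoch time in nanoseconds
    ephemeral: bool = False


def is_store_eligible(msg: StoredCandidate, now_ns: Optional[int] = None) -> bool:
    """Apply the timestamp and ephemeral rules (message validity is
    assumed to have been checked already)."""
    if msg.ephemeral:
        return False
    if not msg.timestamp:
        return False  # timestamp MUST be populated
    now_ns = time.time_ns() if now_ns is None else now_ns
    # The node MAY reject messages more than 20 s off; this sketch does.
    return abs(now_ns - msg.timestamp) <= MAX_CLOCK_DRIFT_NS
```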

Waku message sorting

The key-value entries in the store MUST be time-sorted by the 14/WAKU2-MESSAGE timestamp attribute. Where two or more key-value entries have identical timestamp values, the entries MUST be further sorted by the natural order of their message hash keys.

Within the context of traversing over key-value entries in the store, "forward" indicates traversing the entries in ascending order, whereas "backward" indicates traversing the entries in descending order.
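Since the sort key is (timestamp, message hash), both traversal orders follow from a single comparison key. This sketch assumes the "natural order" of hash keys means lexicographic byte order, which is how Python compares `bytes` objects; the helper names are hypothetical.

```python
from typing import List, Tuple

Entry = Tuple[int, bytes]  # (timestamp_ns, message_hash)


def sort_forward(entries: List[Entry]) -> List[Entry]:
    """Ascending by timestamp; ties broken by the byte order of the hash key."""
    return sorted(entries, key=lambda e: (e[0], e[1]))


def sort_backward(entries: List[Entry]) -> List[Entry]:
    """Descending traversal: the exact reverse of the forward order."""
    return sorted(entries, key=lambda e: (e[0], e[1]), reverse=True)
```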

Pagination

If a large number of entries in the store service node match the query criteria provided in a StoreQueryRequest, the client MAY make use of pagination in a chain of store query request and response transactions to retrieve the full response in smaller batches termed "pages". Pagination can be performed either in a forward or backward direction.

A store query client MAY indicate the maximum number of matching entries it wants in the StoreQueryResponse by setting the page size limit in the pagination_limit field. Note that a store service node MAY enforce its own limit if the pagination_limit is unset or larger than the service node's internal page size limit.

A StoreQueryResponse with a populated pagination_cursor indicates that more stored entries match the query than were included in the response. A StoreQueryResponse without a populated pagination_cursor indicates that there are no more matching entries in the store.

The client MAY request the next page of entries from the store service node by populating a subsequent StoreQueryRequest with the pagination_cursor received in the StoreQueryResponse. All other fields and query criteria MUST be the same as in the preceding StoreQueryRequest.

A StoreQueryRequest without a populated pagination_cursor indicates that the client wants to retrieve the "first page" of the stored entries matching the query.
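A client-side pagination loop could be written as below. The types and the `send` and `new_id` callables are hypothetical, and the response carries only message hashes for brevity. The sketch issues a fresh request_id for each page, reading the uniqueness requirement on request_id as taking precedence over the "all other fields MUST be the same" rule; that reading is an assumption.

```python
from dataclasses import dataclass, replace
from typing import Callable, Iterator, List, Optional


@dataclass(frozen=True)
class StoreQueryRequest:
    request_id: str
    include_data: bool = False
    pubsub_topic: Optional[str] = None
    content_topics: tuple = ()
    pagination_cursor: Optional[bytes] = None
    pagination_forward: bool = False
    pagination_limit: Optional[int] = None


@dataclass
class StoreQueryResponse:
    request_id: str
    status_code: int
    messages: List[bytes]  # message hashes only, for brevity
    pagination_cursor: Optional[bytes] = None


def fetch_all(first: StoreQueryRequest,
              send: Callable[[StoreQueryRequest], StoreQueryResponse],
              new_id: Callable[[], str]) -> Iterator[bytes]:
    """Follow pagination cursors until a response carries none."""
    req = first
    while True:
        resp = send(req)
        if not 200 <= resp.status_code <= 299:
            raise RuntimeError(f"store query failed: {resp.status_code}")
        yield from resp.messages
        if resp.pagination_cursor is None:
            return  # no more matching entries
        # Same criteria, fresh request_id, cursor from the last response.
        req = replace(req, request_id=new_id(),
                      pagination_cursor=resp.pagination_cursor)
```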

Store Query Request

A client node MUST send all historical message queries within a StoreQueryRequest message. This request MUST contain a request_id. The request_id MUST be a uniquely generated string.

If the store query client requires the store service node to include 14/WAKU2-MESSAGE values in the query response, it MUST set include_data to true. If the store query client requires the store service node to return only message hash keys in the query response, it SHOULD set include_data to false. By default, therefore, the store service node assumes include_data to be false.

A store query client MAY include query filter criteria in the StoreQueryRequest.

There are two types of filter use cases:

- Content filtered queries and

- Message hash lookup queries

Content filtered queries

A store query client MAY request the store service node to filter historical entries by a content filter. Such a client MAY create a filter on content topic, on time range or on both.

To filter on content topic, the client MUST populate both the pubsub_topic and content_topics fields. The client MUST NOT populate either pubsub_topic or content_topics and leave the other unset. Both fields MUST either be set or unset. A mixed content topic filter with just one of either pubsub_topic or content_topics set SHOULD be regarded as an invalid request.

To filter on time range, the client MUST set time_start, time_end, or both. Each of the time_start and time_end fields should contain a Unix epoch timestamp in nanoseconds. An unset time_start SHOULD be interpreted as "from the oldest stored entry". An unset time_end SHOULD be interpreted as "up to the youngest stored entry".

If any of the content filter fields are set, namely pubsub_topic, content_topics, time_start, or time_end, the client MUST NOT set the message_hashes field.

Message hash lookup queries

A store query client MAY request the store service node to filter historical entries by one or more matching message hash keys. This type of query acts as a "lookup" against a message hash key or set of keys already known to the client.

In order to perform a lookup query, the store query client MUST populate the message_hashes field with the list of message hash keys it wants to look up in the store service node.

If the message_hashes field is set, the client MUST NOT set any of the content filter fields, namely pubsub_topic, content_topics, time_start, or time_end.
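The mutual-exclusion rules for content filtered and lookup queries can be captured in a small validator. The function and its return labels are hypothetical; "unfiltered" denotes a request that sets no filter criteria at all.

```python
from typing import Optional, Sequence


def classify_store_query(pubsub_topic: Optional[str],
                         content_topics: Sequence[str],
                         time_start: Optional[int],
                         time_end: Optional[int],
                         message_hashes: Sequence[bytes]) -> str:
    """Return 'content', 'lookup', 'unfiltered' or 'invalid'."""
    has_topic = pubsub_topic is not None
    has_cts = len(content_topics) > 0
    if has_topic != has_cts:
        return "invalid"  # mixed content topic filter
    content_filter = has_topic or time_start is not None or time_end is not None
    if content_filter and message_hashes:
        return "invalid"  # content filter and lookup are mutually exclusive
    if message_hashes:
        return "lookup"
    return "content" if content_filter else "unfiltered"
```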

Presence queries

A presence query is a special type of lookup query that allows a client to check for the presence of one or more messages in the store service node, without retrieving the full contents (values) of the messages. This can, for example, be used as part of a reliability mechanism, whereby store query clients verify that previously published messages have been successfully stored.

In order to perform a presence query, the store query client MUST populate the message_hashes field in the StoreQueryRequest with the list of message hashes for which it wants to verify presence in the store service node. The include_data property MUST be set to false.

The client SHOULD interpret every message_hash returned in the messages field of the StoreQueryResponse as present in the store. The client SHOULD assume that all other message hashes included in the original StoreQueryRequest but not in the StoreQueryResponse are not present in the store.
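Interpreting a presence query response then reduces to a set membership test. This hypothetical helper assumes the whole answer fits in a single page, i.e. the response carries no pagination_cursor; otherwise, hashes missing from one page could still appear on a later one.

```python
from typing import Dict, Iterable, List


def presence_from_response(requested: List[bytes],
                           returned_hashes: Iterable[bytes]) -> Dict[bytes, bool]:
    """Map each requested hash to True if the store returned it, else False."""
    present = set(returned_hashes)
    return {h: h in present for h in requested}
```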

Pagination info

The store query client MAY include a message hash as pagination_cursor to indicate at which key-value entry a store service node SHOULD start the query. The pagination_cursor is treated as exclusive, and the corresponding entry will not be included in subsequent store query responses. For forward queries, only messages following (see sorting) the one indexed at pagination_cursor will be returned. For backward queries, only messages preceding (see sorting) the one indexed at pagination_cursor will be returned.

If the store query client requires the store service node to perform a forward query, it MUST set pagination_forward to true. If the store query client requires the store service node to perform a backward query, it SHOULD set pagination_forward to false. By default, therefore, the store service node assumes pagination to be backward.

A store query client MAY indicate the maximum number of matching entries it wants in the StoreQueryResponse by setting the page size limit in the pagination_limit field. Note that a store service node MAY enforce its own limit if the pagination_limit is unset or larger than the service node's internal page size limit.

See pagination for more on how the pagination info is used in store transactions.
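The exclusive-cursor semantics, together with the rule that responses are always ordered forward, can be sketched as a service-side paging function. Entries are reduced to (timestamp, hash) tuples. The default `max_page` of 100, the treatment of a zero limit as unset, and the choice to raise on an unknown cursor are all assumptions, not values from the specification.

```python
from typing import List, Optional, Tuple

Entry = Tuple[int, bytes]  # (timestamp_ns, message_hash) of one matching entry


def page(matching: List[Entry], cursor: Optional[bytes], forward: bool,
         limit: Optional[int],
         max_page: int = 100) -> Tuple[List[Entry], Optional[bytes]]:
    """Return one page of entries in forward order, plus the next cursor."""
    ordered = sorted(matching)  # by timestamp, then by hash bytes
    if not forward:
        ordered.reverse()  # a backward query traverses newest first
    if cursor is not None:
        keys = [h for _, h in ordered]
        if cursor not in keys:
            raise ValueError("unknown pagination_cursor")
        # Exclusive cursor: resume right after the entry it names.
        ordered = ordered[keys.index(cursor) + 1:]
    # Unset, zero or oversized limits fall back to the node's own maximum.
    size = max_page if not limit or limit > max_page else limit
    chunk, rest = ordered[:size], ordered[size:]
    # The next cursor is the last entry visited in traversal order.
    next_cursor = chunk[-1][1] if rest else None
    if not forward:
        chunk.reverse()  # responses are always ordered forward
    return chunk, next_cursor
```

For a backward query, the cursor is the oldest entry of the returned page, so the next request continues toward older entries.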

Store Query Response

In response to any StoreQueryRequest, a store service node SHOULD respond with a StoreQueryResponse with a request_id matching that of the request. This response MUST contain a status_code indicating whether the request was successful. Successful status codes are in the 2xx range. A client node SHOULD consider all other status codes as error codes and assume that the requested operation has failed.

In addition, the store service node MAY choose to provide a more detailed status description in the status_desc field.

Filter matching

For content filtered queries, an entry in the store service node matches the filter criteria in a StoreQueryRequest if each of the following conditions is met:

- its content_topic is in the request content_topics set and it was published on a matching pubsub_topic, OR the request content_topics and pubsub_topic fields are unset
- its timestamp is larger than or equal to the request start_time, OR the request start_time is unset
- its timestamp is smaller than the request end_time, OR the request end_time is unset

Note that for content filtered queries, start_time is treated as inclusive and end_time is treated as exclusive.
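The three matching conditions, with start_time inclusive and end_time exclusive, can be written as a single predicate. This is a sketch under stated assumptions. The `Entry` and `ContentFilter` types are hypothetical, an empty content_topics set or a None field stands for "unset", and timestamps are plain integers in the same unit as start_time and end_time.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Entry:
    content_topic: str
    pubsub_topic: str
    timestamp: int  # same unit as the request's start_time/end_time


@dataclass(frozen=True)
class ContentFilter:
    # An empty set or None models an unset request field (assumption).
    content_topics: FrozenSet[str] = frozenset()
    pubsub_topic: Optional[str] = None
    start_time: Optional[int] = None
    end_time: Optional[int] = None


def matches(entry: Entry, f: ContentFilter) -> bool:
    topics_unset = not f.content_topics and f.pubsub_topic is None
    topic_ok = topics_unset or (
        entry.content_topic in f.content_topics
        and entry.pubsub_topic == f.pubsub_topic
    )
    start_ok = f.start_time is None or entry.timestamp >= f.start_time  # inclusive
    end_ok = f.end_time is None or entry.timestamp < f.end_time  # exclusive
    return topic_ok and start_ok and end_ok
```

A request that sets only one of the two topic fields never matches in this sketch. The text above does not spell out that case, so a real node may handle it differently.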

For message hash lookup queries, an entry in the store service node matches the filter criteria if its message_hash is in the request message_hashes set.

The store service node SHOULD respond with an error code and discard the request if the store query request contains both content filter criteria and message hashes.
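A service node first has to decide which kind of query it received. The sketch below assumes that start_time and end_time belong to the content filter criteria, as in the matching rules above. `classify_request` and `QueryKind` are hypothetical, and raising `ValueError` stands in for replying with an error code.

```python
from enum import Enum
from typing import FrozenSet, Optional


class QueryKind(Enum):
    CONTENT_FILTER = "content_filter"
    HASH_LOOKUP = "hash_lookup"


def classify_request(
    content_topics: FrozenSet[str],
    pubsub_topic: Optional[str],
    start_time: Optional[int],
    end_time: Optional[int],
    message_hashes: FrozenSet[bytes],
) -> QueryKind:
    has_content_filter = (
        bool(content_topics)
        or pubsub_topic is not None
        or start_time is not None
        or end_time is not None
    )
    if has_content_filter and message_hashes:
        # Both kinds of criteria: the node should reply with an error code
        # and discard the request.
        raise ValueError("request mixes content filter criteria and message hashes")
    return QueryKind.HASH_LOOKUP if message_hashes else QueryKind.CONTENT_FILTER
```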

Populating response messages

The store service node SHOULD populate the messages field in the response only with entries matching the filter criteria provided in the corresponding request.

Regardless of whether the response is to a forward or backward query, the messages field in the response MUST be ordered in a forward direction according to the message sorting rules.

If the corresponding StoreQueryRequest has include_data set to true, the service node SHOULD populate both the message_hash and message for each entry in the response. In all other cases, the store service node SHOULD populate only the message_hash field for each entry in the response.
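The rules for populating messages can be sketched as follows. The sketch assumes its input already contains only the matching entries in forward order, and it uses raw bytes as a stand-in for the serialized message. `ResponseEntry` and `populate_messages` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class ResponseEntry:
    message_hash: bytes
    message: Optional[bytes] = None  # stand-in for the serialized message


def populate_messages(
    matching: List[Tuple[bytes, bytes]],  # (hash, message), forward order
    include_data: bool,
) -> List[ResponseEntry]:
    # The input order is preserved, so the response stays in forward order
    # for both forward and backward queries.
    if include_data:
        return [ResponseEntry(h, m) for h, m in matching]
    return [ResponseEntry(h) for h, _ in matching]
```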

Paginating the response

The response SHOULD NOT contain more messages than the pagination_limit provided in the corresponding StoreQueryRequest.

It is RECOMMENDED that the store node defines its own maximum page size internally.

If the pagination_limit in the request is unset or exceeds this internal maximum page size, the store service node SHOULD ignore the pagination_limit field and apply its own internal maximum page size.
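The page size the node actually applies reduces to a small function. The value 100 for the internal maximum is purely illustrative, since the spec leaves it to each node. Modelling an unset pagination_limit as None is also an assumption.

```python
from typing import Optional

# Hypothetical internal maximum. Each store node chooses its own.
MAX_PAGE_SIZE = 100


def effective_page_size(
    pagination_limit: Optional[int], max_page_size: int = MAX_PAGE_SIZE
) -> int:
    # Unset, or larger than the node's own maximum: use the maximum instead.
    if pagination_limit is None or pagination_limit > max_page_size:
        return max_page_size
    return pagination_limit
```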

In response to a forward StoreQueryRequest:

- if the pagination_cursor is set, the store service node SHOULD populate the messages field with matching entries following the pagination_cursor (exclusive).
- if the pagination_cursor is unset, the store service node SHOULD populate the messages field with matching entries from the first entry in the store.
- if there are still more matching entries in the store after the maximum page size is reached while populating the response, the store service node SHOULD populate the pagination_cursor in the StoreQueryResponse with the message hash key of the last entry included in the response.
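The forward rules can be combined into one page-building step that also yields the next cursor. This is a minimal sketch with three assumptions: the node has the matching entries as a forward-sorted list of message hashes, any supplied cursor is one of them, and page_size is the limit already resolved as described above. `forward_page` is a hypothetical name.

```python
from typing import List, Optional, Tuple


def forward_page(
    sorted_hashes: List[bytes],  # matching entries, forward order
    pagination_cursor: Optional[bytes],
    page_size: int,
) -> Tuple[List[bytes], Optional[bytes]]:
    """Return (page, next_cursor) for a forward query."""
    if pagination_cursor is None:
        start = 0  # begin at the first entry in the store
    else:
        start = sorted_hashes.index(pagination_cursor) + 1  # cursor is exclusive
    page = sorted_hashes[start:start + page_size]
    more = start + page_size < len(sorted_hashes)
    # If entries remain, the next cursor is the last entry in this page.
    return page, (page[-1] if more and page else None)
```

A client would keep resending the returned cursor with pagination_forward set to true until no cursor comes back.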

In response to a backward StoreQueryRequest:

- if the pagination_cursor is set, the store service node SHOULD populate the messages field with matching entries preceding the pagination_cursor (exclusive).
- if the pagination_cursor is unset, the store service node SHOULD populate the messages field with matching entries from the last entry in the store.
- if there are still more matching entries in the store after the maximum page size is reached while populating the response, the store service node SHOULD populate the pagination_cursor in the StoreQueryResponse with the message hash key of the first entry included in the response.
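The backward case mirrors the forward one, but the page is still returned in forward order, and the next cursor points at its first (oldest) entry. The sketch makes the same assumptions as a forward-paging sketch would: the matching entries form a forward-sorted list of message hashes, the cursor is one of them, and page_size is already resolved. `backward_page` is a hypothetical name.

```python
from typing import List, Optional, Tuple


def backward_page(
    sorted_hashes: List[bytes],  # matching entries, forward order
    pagination_cursor: Optional[bytes],
    page_size: int,
) -> Tuple[List[bytes], Optional[bytes]]:
    """Return (page, next_cursor) for a backward query."""
    # Walk back from the cursor (exclusive), or from the last entry in the store.
    if pagination_cursor is None:
        end = len(sorted_hashes)
    else:
        end = sorted_hashes.index(pagination_cursor)
    start = max(0, end - page_size)
    page = sorted_hashes[start:end]  # slice keeps forward order
    more = start > 0
    # If older entries remain, the next cursor is the first entry in this page.
    return page, (page[0] if more and page else None)
```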

Security Consideration

The main security consideration while using this protocol is that a querying node has to reveal its content filters of interest to the queried node, hence potentially compromising its privacy.

Adversarial Model

Any peer running the WAKU2-STORE protocol, i.e., both the querying node and the queried node, is considered an adversary.

Furthermore, we currently consider the adversary as a passive entity that attempts to collect information from other peers to conduct an attack, but it does so without violating protocol definitions and instructions.

As we evolve the protocol, further adversarial models will be considered.

For example, under the passive adversarial model, no malicious node hides or lies about the history of messages, as doing so is against the description of the WAKU2-STORE protocol.

The following are not considered as part of the adversarial model:

- An adversary with a global view of all the peers and their connections.

- An adversary that can eavesdrop on communication links between arbitrary pairs of peers (unless the adversary is one end of the communication). Specifically, the communication channels are assumed to be secure.

Future Work

- Anonymous query: This feature guarantees that nodes can anonymously query historical messages from other nodes, i.e., without disclosing the exact topics of 14/WAKU2-MESSAGE they are interested in. As such, no adversary in the WAKU2-STORE protocol would be able to learn which peer is interested in which content filters, i.e., content topics of 14/WAKU2-MESSAGE. The current version of the WAKU2-STORE protocol does not provide anonymity for historical queries, as the querying node needs to directly connect to another node in the WAKU2-STORE protocol and explicitly disclose the content filters of its interest to retrieve the corresponding messages. However, one can consider preserving anonymity through one of the following ways:
  - By hiding the source of the request, i.e., anonymous communication. That is, the querying node shall hide all its PII in its history request, e.g., its IP address. This can be achieved by using a proxy server or Tor. Note that the current structure of historical requests does not embody any piece of PII; otherwise, such data fields must be treated carefully to achieve query anonymity.
  - By deploying secure 2-party computations (2PC) in which the querying node obtains the historical messages of a certain topic while the queried node learns nothing about the query. Examples of such 2PC protocols are secure one-way Private Set Intersections (PSI).

- Robust and verifiable timestamps: A message's timestamp is a way to show that the message existed prior to some point in time. However, the lack of timestamp verifiability can create room for a range of attacks, including injecting messages with invalid timestamps pointing to the far future.

To better understand the attack, consider a store node whose current clock shows 2021-01-01 00:00:30 (and assume all the other nodes have clocks synchronized to within ±20 seconds). The store node already has a list of messages, (m1, 2021-01-01 00:00:00), (m2, 2021-01-01 00:00:01), ..., (m10, 2021-01-01 00:00:20), that are sorted based on their timestamp.

An attacker sends a message with an arbitrarily large timestamp, e.g., 10 hours ahead of the correct clock: (m', 2021-01-01 10:00:30). The store node places m' at the end of the list: (m1, 2021-01-01 00:00:00), (m2, 2021-01-01 00:00:01), ..., (m10, 2021-01-01 00:00:20), (m', 2021-01-01 10:00:30). Now another message arrives with a valid timestamp, e.g., (m11, 2021-01-01 00:00:45). However, since its timestamp precedes that of the malicious message m', it gets placed before m' in the list, i.e., (m1, 2021-01-01 00:00:00), (m2, 2021-01-01 00:00:01), ..., (m10, 2021-01-01 00:00:20), (m11, 2021-01-01 00:00:45), (m', 2021-01-01 10:00:30). In fact, for the next 10 hours, m' will always be considered the most recent message and served as the last message to the querying nodes, irrespective of how many other messages arrive afterward.

A robust and verifiable timestamp allows the receiver of a message to verify that a message has been generated prior to the claimed timestamp. One solution is the use of open timestamps, e.g., block height in blockchain-based timestamps. That is, messages contain the most recent block height perceived by their senders at the time of message generation. This proves accuracy within a range of minutes (e.g., in the Bitcoin blockchain) or seconds (e.g., in Ethereum 2.0) from the time of origination.
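The ordering problem in the example above can be reproduced with a naive store that trusts claimed timestamps. This is a minimal Python sketch, assuming the store is a list kept sorted with `bisect.insort`. It only models the attack and does not implement the block-height remedy. `insert_sorted` and `ts` are hypothetical helpers.

```python
import bisect
from datetime import datetime
from typing import List, Tuple


def ts(text: str) -> datetime:
    return datetime.strptime(text, "%Y-%m-%d %H:%M:%S")


def insert_sorted(store: List[Tuple[datetime, str]], message: str, when: str) -> None:
    # Naive store: trust the claimed timestamp and keep the list sorted by it.
    bisect.insort(store, (ts(when), message))


store: List[Tuple[datetime, str]] = []
# m3..m9 from the example are omitted; they do not change the outcome.
for name, when in [("m1", "2021-01-01 00:00:00"),
                   ("m2", "2021-01-01 00:00:01"),
                   ("m10", "2021-01-01 00:00:20")]:
    insert_sorted(store, name, when)

insert_sorted(store, "m'", "2021-01-01 10:00:30")   # 10 hours in the future
insert_sorted(store, "m11", "2021-01-01 00:00:45")  # honest, arrives later

order = [name for _, name in store]
print(order)  # ['m1', 'm2', 'm10', 'm11', "m'"]
```

However many honest messages arrive afterward, m' stays at the end of the list until real time catches up with its claimed timestamp.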

Copyright

Copyright and related rights waived via CC0.

Previous versions

References

13/WAKU2-STORE

| Field | Value |
|---|---|
| Name | Waku v2 Store |
| Slug | 13 |
| Status | draft |
| Editor | Simon-Pierre Vivier [email protected] |
| Contributors | Dean Eigenmann [email protected], Oskar Thorén [email protected], Aaryamann Challani [email protected], Sanaz Taheri [email protected], Hanno Cornelius [email protected] |

Timeline

- 2026-01-16 - f01d5b9 - chore: fix links (#260)
- 2026-01-16 - 89f2ea8 - Chore/mdbook updates (#258)
- 2025-12-22 - 0f1855e - Chore/fix headers (#239)
- 2025-12-22 - b1a5783 - Chore/mdbook updates (#237)
- 2025-12-18 - d03e699 - ci: add mdBook configuration (#233)
- 2025-02-03 - a60a2c4 - 13/WAKU-STORE: Update (#124)
- 2024-09-13 - 3ab314d - Fix Files for Linting (#94)
- 2024-08-05 - eb25cd0 - chore: replace email addresses (#86)
- 2024-03-21 - 2eaa794 - Broken Links + Change Editors (#26)
- 2024-02-01 - 755be94 - Update and rename STORE.md to store.md
- 2024-01-27 - 3baed07 - Rename README.md to STORE.md
- 2024-01-27 - eef961b - remove rfs folder
- 2024-01-25 - 51e2879 - Create README.md

Abstract

This specification explains the 13/WAKU2-STORE protocol, which enables querying of messages received through the relay protocol and stored by other nodes. It also supports pagination for more efficient querying of historical messages.

Protocol identifier: /vac/waku/store/2.0.0-beta4

Terminology

The term PII, Personally Identifiable Information, refers to any piece of data that can be used to uniquely identify a user. For example, the signature verification key and the hash of one's static IP address are unique for each user and hence count as PII.

Design Requirements

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC2119.

Nodes willing to provide the storage service using the 13/WAKU2-STORE protocol SHOULD provide a complete and full view of message history. As such, they are required to be highly available and, specifically, to have high uptime so that they consistently receive and store network messages. The high uptime requirement ensures that no message is missed, hence a complete and intact view of the message history is delivered to the querying nodes.

Nevertheless, in case storage provider nodes cannot afford high availability, the querying nodes may retrieve the historical messages from multiple sources to achieve a full and intact view of the past.
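Retrieving history from several store nodes means merging their answers. The sketch below is one possible approach, not something this specification prescribes. It assumes each message can be identified by a hash (how that hash is computed is outside the sketch), keeps one copy per hash, and orders the result by timestamp with the hash as tie-breaker. The tuple shape and `merge_histories` are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical message shape: (message_hash, timestamp, payload).
Message = Tuple[bytes, int, bytes]


def merge_histories(sources: Iterable[List[Message]]) -> List[Message]:
    """Union the histories returned by several store nodes.

    Messages with the same hash are kept once. The result is ordered by
    timestamp, with the hash as a tie-breaker.
    """
    seen: Dict[bytes, Message] = {}
    for history in sources:
        for msg in history:
            seen.setdefault(msg[0], msg)  # first copy wins
    return sorted(seen.values(), key=lambda m: (m[1], m[0]))
```

Each node may have missed different messages while offline. Taking the union lets the querying node fill those gaps, as the paragraph above suggests.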

The concept of ephemeral messages introduced in 14/WAKU2-MESSAGE affects 13/WAKU2-STORE as well. Nodes running 13/WAKU2-STORE SHOULD support ephemeral messages as specified in 14/WAKU2-MESSAGE.

Nodes running 13/WAKU2-STORE SHOULD NOT store messages